Welcome to this lesson on adjusting an LLM's messages. Today, we will focus on refining LLM responses to achieve more accurate and useful outputs. By learning how to modify LLM messages, you can enhance the quality of interactions and ensure that the responses meet your specific needs.

To guide LLMs in producing structured responses, it is crucial to define the desired answer format. This involves specifying constraints that the LLM should follow. For example, if you want a list of teaching strategies, you should clearly state that in your prompt. This helps the LLM understand the structure you expect in its response.

Consider the following prompt:

By specifying that you want a "list" and an "overview," you are setting clear expectations for the LLM's response format. However, this is not specific enough. Let's consider a sample LLM output:

Part of the output is omitted for brevity. This output is not what we expected: it includes implementation details we didn't ask for. Also, we only want to see active learning strategies.

One approach is to introduce a constraints section with many specific instructions. Let's take a look at a more efficient approach.

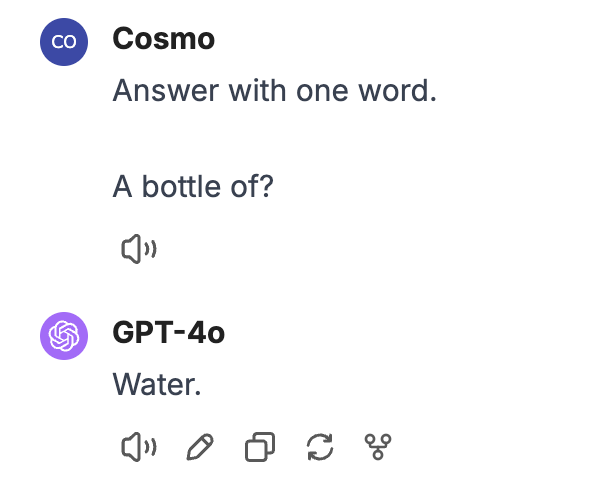

Let's consider this dialogue.

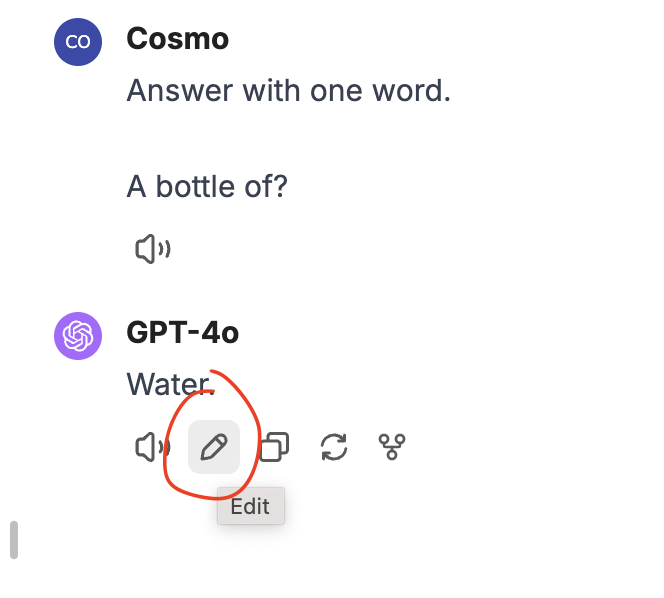

You can modify the LLM's answer using the edit button:

A modern chat-based LLM works as follows: it takes the whole preceding dialogue and predicts the next reply. This means that even slight modifications to previous responses can significantly affect subsequent outputs. If an LLM's response includes unnecessary details, restructuring the answer before continuing the dialogue helps future responses adhere more closely to the desired format. However, one potential limitation is that editing a response does not change the model's underlying tendencies; it only changes the context the model interprets when producing future responses. If inconsistencies persist, resetting the conversation might be necessary.
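For readers who like to see the mechanics, here is a minimal Python sketch of this idea. It assumes a dialogue is stored as a list of role/content messages, a layout similar to what many chat interfaces use internally. The helper names `build_context` and `edit_last_assistant_reply` and the placeholder texts are hypothetical, and no real model is called.

```python
from copy import deepcopy


def build_context(messages):
    """Flatten the dialogue into the text a model would be asked to continue.

    Assumption: real systems use their own templates; this only illustrates
    that the model sees the whole dialogue, including edited replies.
    """
    return "\n".join(f"{m['role']}: {m['content']}" for m in messages)


def edit_last_assistant_reply(messages, new_content):
    """Return a copy of the dialogue with the latest assistant reply replaced."""
    edited = deepcopy(messages)
    for message in reversed(edited):
        if message["role"] == "assistant":
            message["content"] = new_content
            return edited
    raise ValueError("the dialogue has no assistant reply to edit")


# Placeholder texts stand in for your own prompt and the model's answer.
dialogue = [
    {"role": "user", "content": "<your original prompt>"},
    {"role": "assistant", "content": "<long answer with unwanted details>"},
]

# Edit the answer, then continue the same dialogue with a follow-up question.
edited = edit_last_assistant_reply(dialogue, "<your shortened, reformatted answer>")
edited.append({"role": "user", "content": "<your follow-up question>"})

# The next reply would be predicted from this text, which no longer
# contains the original long answer.
print(build_context(edited))
```

Because the next reply is predicted from the edited dialogue only, the shortened answer acts as an example of the format you want.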

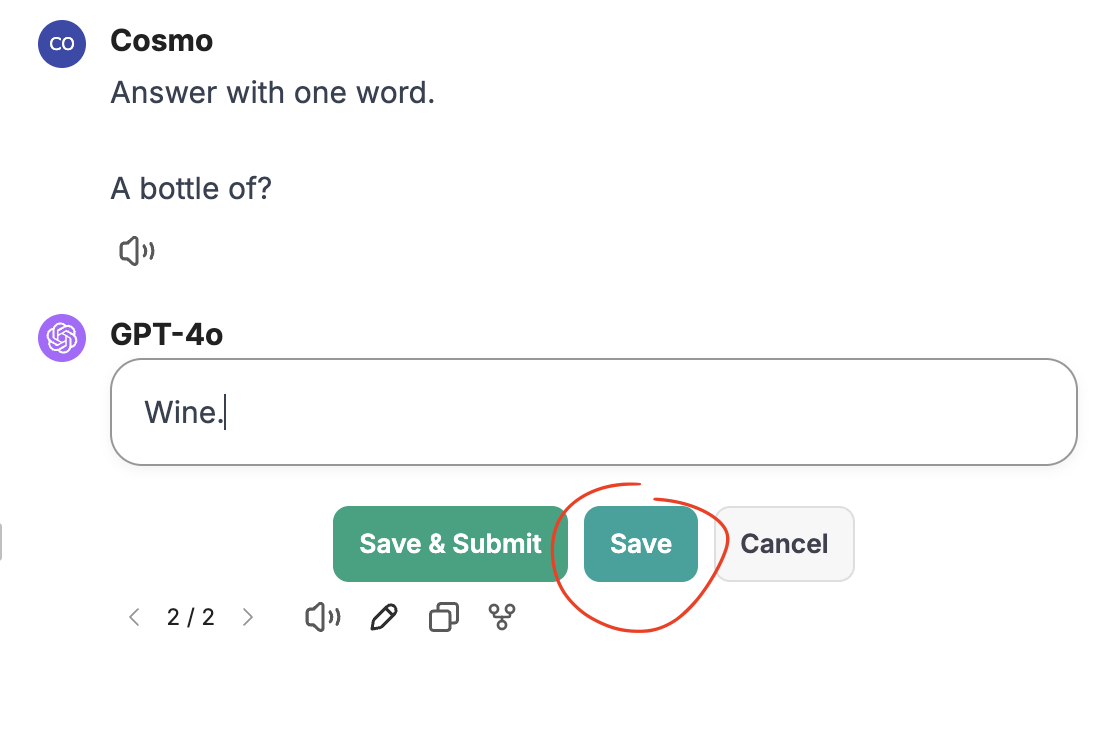

Once you have modified the answer, save it:

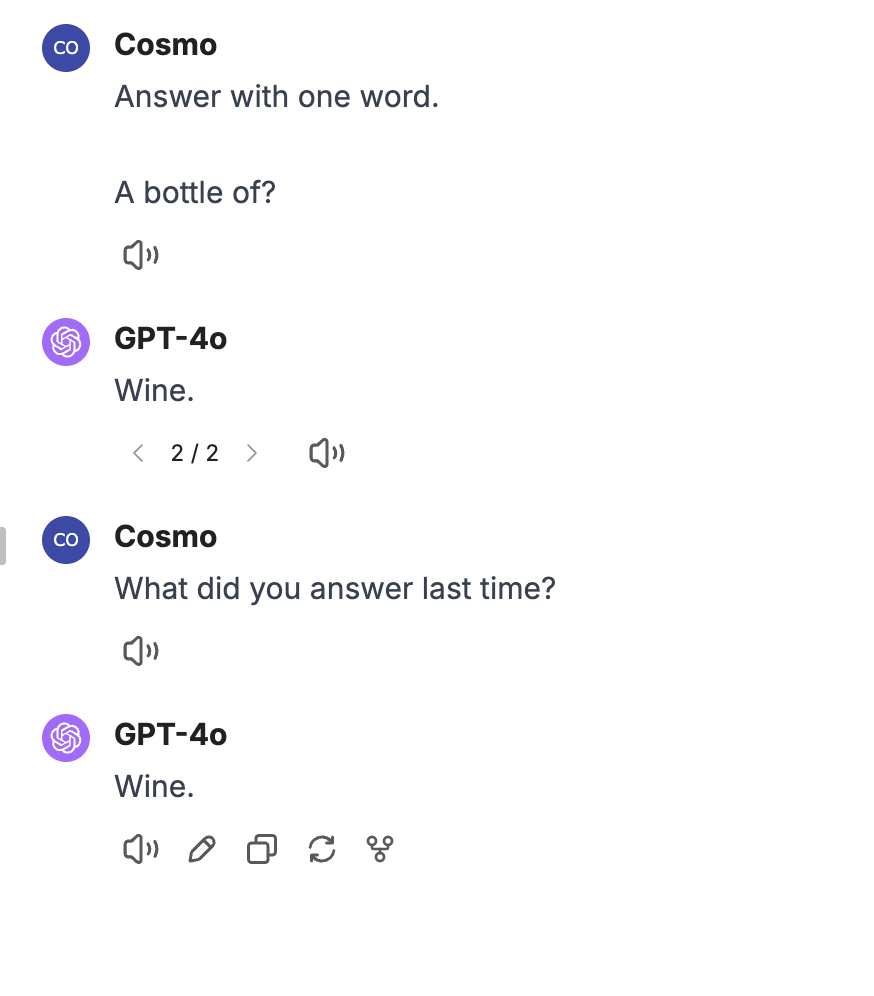

Let's test whether the LLM treats the new version of the answer as its own:

Great!

When the initial response from an LLM doesn't fully meet your expectations, you can manually adjust the response to better fit the desired format. This process improves the clarity of the response and helps the LLM understand the task more effectively. When you ask the next question, the LLM will treat the modified answer as its own initial answer and is likely to respond in the same format.

For instance, let's reorganize the LLM's answer in the following way:

By removing initial context, implementation details, and other strategy types, we implicitly communicate the desired structure to the LLM.

Now, let's ask the following question in the same dialogue. For instance, we can run this prompt:

And here is the LLM's answer:

Note how the LLM answers in the desired format even though we did not specify any additional constraints. By continuing the dialogue and refining the LLM's output, you can guide the LLM to better meet your needs.

Note: this approach can't guarantee the correct format. However, it is usually faster than explicitly writing down all the constraints, so it can be very efficient, especially for lesson planning and resource creation. We will see more such examples in the practice session.

In this lesson, we covered the importance of defining the desired answer format and how to modify LLM responses for clarity. We also explored how continued dialogue can implicitly communicate constraints to the LLM. By practicing these techniques, you can enhance the quality of interactions with LLMs for your teaching needs.

As you move on to the practice exercises, remember that hands-on experience is key to mastering prompt engineering. Use the skills you've learned to refine LLM responses and achieve the desired outcomes for your classroom. Good luck!