After prioritizing your opportunities through RICE scoring, Kano classification, and Opportunity Solution Trees, you now face the moment of truth: will your carefully selected solutions actually deliver the value you predicted? The frameworks you've mastered for convergent thinking helped narrow dozens of possibilities into a focused roadmap, but even the most rigorous prioritization can't eliminate uncertainty about user behavior and market response. This lesson equips you with the mindset and methods to validate assumptions quickly, learn from real user interactions, and adapt your product direction based on evidence rather than intuition.

The transition from planning to validation represents a fundamental shift in how you operate as a Product Manager. Where prioritization frameworks dealt with theoretical value and projected impact, validation confronts you with messy reality—users who behave unexpectedly, technical constraints that emerge mid-build, and metrics that refuse to move despite your best hypotheses. Throughout this unit, you'll learn to embrace this uncertainty through disciplined experimentation, treating every feature release not as a final answer but as a question posed to the market. The ability to design lean experiments, interpret ambiguous results, and pivot quickly based on learnings separates Product Managers who ship features from those who drive meaningful outcomes.

The Minimum Viable Product (MVP) concept has become so ubiquitous in product development that its true purpose often gets lost. An MVP isn't simply a stripped-down version of your final vision or a way to ship something quickly—it's a learning instrument designed to test your riskiest assumption with the least investment. When you scope an MVP correctly, you're building just enough to answer a critical question about user behavior, market demand, or technical feasibility. This discipline prevents you from investing months building elaborate features that users ultimately reject.

Understanding what makes a product minimum yet still viable requires careful balance. Minimum means eliminating every feature, design polish, and technical sophistication that doesn't directly contribute to testing your core assumption. For instance, if you're testing whether users will share workout commitments for accountability, you don't need a beautiful interface, sophisticated matching algorithms, or gamification—you need the bare ability to make and share a commitment. Yet the product must remain viable, meaning users can actually complete the core job you're testing. An accountability feature that crashes when users try to share defeats the experiment's purpose, regardless of how minimal it is.

The most effective MVPs isolate a single critical assumption for validation. Consider a Product Manager testing whether automated expense categorization will reduce small business accounting time. The critical assumption isn't whether the AI can achieve 95% accuracy—it's whether users trust automated categorization enough to rely on it. Therefore, the MVP might use simple rule-based categorization achieving only 70% accuracy, but if users won't even try the feature, you've saved months of ML model training. This focused approach to assumption testing transforms MVPs from rushed, half-baked products into precise experimental instruments.
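To show how cheap a rule-based first version can be, here is a minimal sketch of one. It also computes the metric that actually tests the trust assumption: how often users keep the suggested category. The keyword rules, category names, and the `Suggestion` record are hypothetical placeholders, not taken from any real accounting product.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical keyword rules: deliberately crude, because this MVP tests
# whether users trust automated categorization, not how accurate it is.
RULES: dict[str, tuple[str, ...]] = {
    "Travel": ("airline", "hotel", "taxi"),
    "Software": ("github", "slack", "adobe"),
    "Meals": ("restaurant", "cafe", "coffee"),
}


def categorize(description: str) -> str | None:
    """Return the first category with a keyword in the description, else None."""
    text = description.lower()
    for category, keywords in RULES.items():
        if any(keyword in text for keyword in keywords):
            return category
    return None  # No rule matched: the user categorizes this expense manually.


@dataclass
class Suggestion:
    description: str
    suggested: str | None
    accepted: bool  # True if the user kept the suggested category.


def acceptance_rate(log: list[Suggestion]) -> float:
    """Share of shown suggestions that users kept: a rough proxy for trust."""
    shown = [s for s in log if s.suggested is not None]
    if not shown:
        return 0.0
    return sum(s.accepted for s in shown) / len(shown)


log = [
    Suggestion("Hotel stay, Chicago", categorize("Hotel stay, Chicago"), accepted=True),
    Suggestion("GitHub Team plan", categorize("GitHub Team plan"), accepted=True),
    Suggestion("Corner Cafe lunch", categorize("Corner Cafe lunch"), accepted=False),
]
print(f"{acceptance_rate(log):.2f}")  # 0.67
```

A low acceptance rate, or users who never open the feature at all, answers the trust question before any investment in model training.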

Scoping an MVP requires brutal prioritization conversations with your team. When your designer insists that "users expect polish in 2024" and your engineer argues "we can't ship without proper error handling," you must distinguish between what's necessary for learning and what's necessary for launch. A useful framework for these debates involves asking: "If this element were broken or missing, would it invalidate our experiment?" Loading animations and smooth transitions rarely meet this bar, while core functionality that enables the behavior you're testing always does.

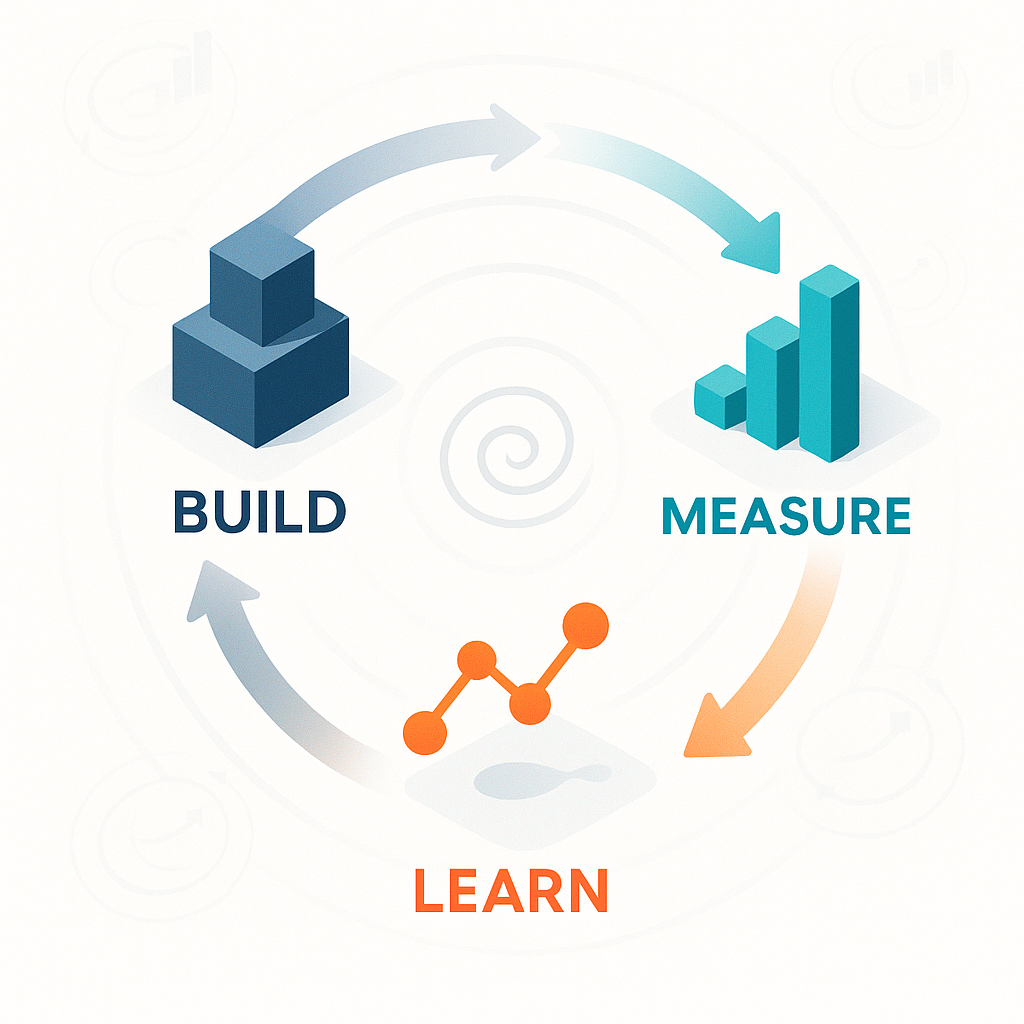

The Build-Measure-Learn loop, popularized by Eric Ries in The Lean Startup, provides a systematic approach to navigating uncertainty through rapid experimentation. Rather than betting everything on a single elaborate solution, you build small experiments, measure their impact precisely, and learn what actually drives user behavior. This iterative cycle transforms product development from a linear waterfall into a responsive feedback system that adapts based on evidence.

The power of this framework lies not in its individual components but in the speed and discipline with which you complete each cycle. A team that takes three months to complete one loop learns far less than a team completing weekly cycles, even if the longer cycle yields more polished output. Speed of learning, not perfection of execution, determines who wins in uncertain markets. Working at that cadence requires rethinking how you plan work, measure success, and make decisions.

Building within a Build-Measure-Learn loop means accepting that most of what you create is temporary. You're not crafting the final solution but rather the next experiment in a series of investigations. This mindset shift liberates teams from perfectionism while demanding rigor in experimental design. When your engineer asks "Should we build this to scale?" the answer is usually "No, we're building to learn." However, this doesn't mean accepting sloppy code—it means architecting for change rather than permanence, using feature flags liberally, and keeping experiments isolated from core systems.
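A common way to keep an experiment isolated and easy to remove is a feature flag with deterministic bucketing. The sketch below is a hypothetical helper: `in_rollout`, `render_commitment_screen`, and the flag name are illustrative and are not any particular flag service's API. It hashes the flag name together with the user ID so that each user lands in a stable bucket.

```python
import hashlib


def in_rollout(user_id: str, flag_name: str, rollout_percent: float) -> bool:
    """Return True if this user should see the flagged experiment.

    Hashing flag_name together with user_id gives every user a stable bucket
    per flag: the same user always gets the same experience, and separate
    flags split the population independently of each other.
    """
    key = f"{flag_name}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest()[:8], 16) % 10_000  # 0..9999
    return bucket < rollout_percent * 100  # 10.0 percent -> buckets 0..999


# The experimental path lives behind one branch, so ending the experiment
# means deleting that branch rather than untangling core logic.
def render_commitment_screen(user_id: str) -> str:
    if in_rollout(user_id, "accountability_sharing_v1", rollout_percent=10.0):
        return "commitment screen with share button"  # experiment
    return "commitment screen"  # existing behavior


print(render_commitment_screen("user-42"))
```

Because assignment is a pure function of the IDs, it needs no stored state. Raising `rollout_percent` only adds users to the experiment, and nobody already in it is switched out.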

The Measure phase requires infrastructure and discipline that many teams underestimate. Before building anything, you must instrument tracking for the specific behaviors you're testing. Too often, teams launch experiments and then scramble to add analytics, only to discover they can't answer their fundamental question because they never captured the right events. Effective measurement also means fixing your sample size and test duration in advance. If you need 1,000 users and two weeks to detect the effect you care about with adequate statistical power, then stopping early because initial results look promising, or extending the test because they look almost significant, inflates your false-positive rate and muddles your learning.
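Here is a minimal sketch of fixing the sample size before launch. It uses the standard normal-approximation formula for comparing two proportions, such as retention in control versus treatment. The baseline rate, target rate, and daily traffic below are assumed inputs for illustration, not figures from this lesson.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline: float, target: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in EACH arm to detect a change from baseline to target.

    Normal-approximation formula for a two-sided test of two proportions.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 when power = 0.80
    variance = baseline * (1 - baseline) + target * (1 - target)
    effect = target - baseline
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


def days_needed(n_per_group: int, eligible_users_per_day: int, groups: int = 2) -> int:
    """Calendar days needed to enroll every group at the given daily traffic."""
    return math.ceil(n_per_group * groups / eligible_users_per_day)


# Assumed inputs: 40% baseline retention, hoping to detect a rise to 43%,
# with 500 newly eligible users per day.
n = sample_size_per_group(baseline=0.40, target=0.43)
print(n, "users per group;", days_needed(n, eligible_users_per_day=500), "days")
```

With these assumed numbers, the calculation asks for a little over 4,200 users per group, about 17 days of traffic. Because the effect size is squared in the denominator, halving the lift you want to detect roughly quadruples the required sample.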

The Learn phase, often the most neglected, transforms raw metrics into actionable insights that inform your next loop. Learning isn't simply observing that retention increased 3%; it's understanding why, and what that implies for your next experiment. Did users with more accountability partners retain better? Did the feature work for runners but not yoga practitioners? These nuanced insights guide whether you double down, pivot, or abandon the current direction.

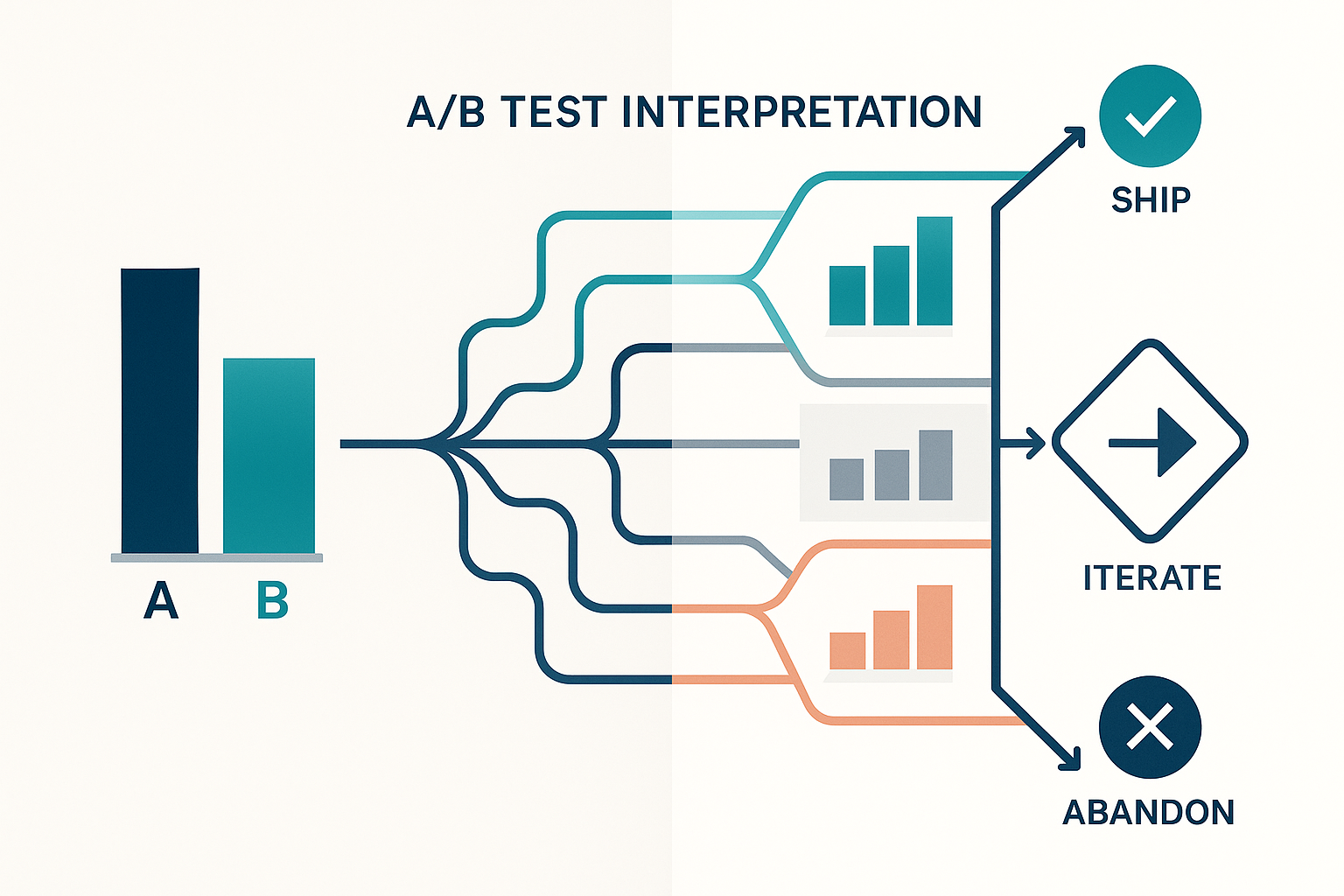

A/B testing provides the gold standard for establishing causation between product changes and user behavior, but interpreting test results requires statistical sophistication and business judgment that go beyond simply checking whether the p-value is below 0.05. As a Product Manager, you must navigate the treacherous waters between statistical significance and practical significance, understand when to declare victory versus when to iterate, and know how to extract maximum learning from ambiguous results.

The first challenge in interpretation is determining whether your results reflect a real effect or merely random noise. A 3% lift in retention might seem meaningful, but a p-value of 0.08 means that if the feature had no effect at all, you would still see a difference at least this large about 8% of the time. It does not mean there is an 8% chance that the difference is pure chance. Statistical significance also isn't everything: a 0.5% improvement with p=0.01 might be statistically rock-solid but practically worthless if it doesn't move your business metrics meaningfully. You must balance statistical rigor with business impact, sometimes accepting higher uncertainty for potentially transformative changes.
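The sketch below separates the two questions: a pooled two-proportion z-test answers "is this likely noise?", and a minimum worthwhile lift answers "does it matter?". The p-value is the probability of seeing a difference at least this large if the true effect were zero. The traffic numbers and the 2-percentage-point threshold are assumptions chosen for illustration; the threshold is a business input, not a statistical constant.

```python
from __future__ import annotations

from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(kept_a: int, n_a: int, kept_b: int, n_b: int) -> tuple[float, float]:
    """Return (absolute lift of B over A, two-sided p-value) via a pooled z-test."""
    rate_a, rate_b = kept_a / n_a, kept_b / n_b
    pooled = (kept_a + kept_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_b - rate_a, p_value


def verdict(lift: float, p_value: float, min_worthwhile_lift: float,
            alpha: float = 0.05) -> str:
    """Combine statistical and practical significance into one decision label."""
    if p_value >= alpha:
        return "inconclusive"
    if lift < 0:
        return "significantly worse"
    if lift < min_worthwhile_lift:
        return "real but too small to matter"
    return "worth shipping"


# Assumed test: 100,000 users per arm, retention 40.0% vs 40.7%.
lift, p = two_proportion_z_test(40_000, 100_000, 40_700, 100_000)
print(f"lift={lift:.3f}, p={p:.4f}")
print(verdict(lift, p, min_worthwhile_lift=0.02))  # real but too small to matter
```

With very large samples, even a 0.7-point lift clears p < 0.05, which is exactly why the practical threshold needs to be agreed on before the test rather than after.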

Beyond the headline metrics, the richest insights often hide in segment analysis and secondary metrics. Your overall retention might increase 2%, but diving deeper reveals that power users improved 8% while casual users actually declined 1%. This heterogeneous treatment effect suggests you should roll out to power users while iterating for casual users. Similarly, watching secondary metrics prevents you from optimizing locally while harming the global system. That feature driving 10% more engagement might also be generating 30% more support tickets, making it a net negative for the business.
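Here is a minimal sketch of both checks: retention lift computed per segment, and a list of guardrail metrics whose increase exceeds an allowed limit. The row format, segment names, and limits are hypothetical, and the toy data is not drawn from real results. In practice, segments should be chosen before the test runs, because slicing results many ways after the fact multiplies the chance of a spurious finding.

```python
from __future__ import annotations

from collections import defaultdict


def lift_by_segment(rows) -> dict[str, float]:
    """rows: iterable of (segment, arm, retained), arm is "control" or "treatment".

    Returns {segment: treatment retention rate minus control retention rate},
    skipping segments that are missing either arm.
    """
    kept: defaultdict = defaultdict(int)
    total: defaultdict = defaultdict(int)
    for segment, arm, retained in rows:
        kept[(segment, arm)] += int(retained)
        total[(segment, arm)] += 1
    lifts = {}
    for segment in sorted({seg for seg, _ in total}):
        n_c, n_t = total[(segment, "control")], total[(segment, "treatment")]
        if n_c and n_t:
            lifts[segment] = kept[(segment, "treatment")] / n_t - kept[(segment, "control")] / n_c
    return lifts


def breached_guardrails(changes: dict[str, float], max_increase: dict[str, float]) -> list[str]:
    """Names of guardrail metrics whose relative increase exceeds its allowed maximum."""
    return [name for name, limit in max_increase.items() if changes.get(name, 0.0) > limit]


# Toy data: 10 users per segment per arm.
rows = (
    [("power", "control", i < 5) for i in range(10)]
    + [("power", "treatment", i < 7) for i in range(10)]
    + [("casual", "control", i < 3) for i in range(10)]
    + [("casual", "treatment", i < 3) for i in range(10)]
)
print({seg: round(lift, 2) for seg, lift in lift_by_segment(rows).items()})  # {'casual': 0.0, 'power': 0.2}
print(breached_guardrails({"engagement": 0.10, "support_tickets": 0.30},
                          max_increase={"support_tickets": 0.05}))  # ['support_tickets']
```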

Moreover, interpreting A/B tests requires understanding the difference between tactical and strategic validation. A tactical test might show that blue buttons outperform green ones by 2%—useful for optimization but not transformative for your product strategy. A strategic test revealing that users with accountability partners retain 40% better fundamentally changes your product direction. You must distinguish between tests that optimize existing mechanisms and tests that validate new directions, allocating your testing bandwidth accordingly.

When facing mixed or ambiguous results, resist the temptation to cherry-pick positive signals or dismiss the test entirely. A test where the treatment group shows a 3.2% retention improvement with p=0.08 and an 18% engagement increase provides valuable signal despite not reaching traditional significance thresholds. The key is extracting learning about why the feature partially worked and what prevented it from achieving full impact. Perhaps 31% of users never activated the feature, suggesting the problem isn't the feature itself but its discoverability or perceived value.
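When a large share of treatment users never touch the feature, the headline lift averages activators and non-activators together, so it understates the effect on people who actually used it. One common back-of-the-envelope adjustment, often called the "no-show" or Bloom adjustment, divides the intent-to-treat lift by the activation rate. It is valid only under the assumptions stated in the docstring. The sketch below treats the 3.2% figure as 3.2 percentage points, which is an assumption for illustration.

```python
def effect_among_activators(itt_lift: float, activation_rate: float) -> float:
    """Rescale an intent-to-treat lift to the treatment users who activated.

    Assumes (1) the feature cannot change behavior for treatment users who
    never activate it and (2) control users could not access it at all.
    """
    if not 0 < activation_rate <= 1:
        raise ValueError("activation_rate must be in (0, 1]")
    return itt_lift / activation_rate


# 31% never activated, so 69% did; ITT lift assumed to be 3.2 points.
print(f"{effect_among_activators(0.032, 0.69):.3f}")  # 0.046
```

This keeps the randomized comparison intact. Directly comparing activators with non-activators would not, because users who choose to activate likely differ in ways that also affect retention. The rescaled estimate also inherits more uncertainty than the headline number, so treat it as a hypothesis for the next experiment rather than a result.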