Welcome to the second lesson of our course on "The MLP Architecture: Activations & Initialization"! We're making excellent progress on our neural network journey. In the previous lesson, we successfully implemented a Multi-Layer Perceptron (MLP) by stacking multiple dense layers, allowing information to flow from input to output through our network.

Today, we'll be exploring an essential component of modern neural networks: the Rectified Linear Unit (ReLU) activation function. While we've been using the sigmoid activation function so far, ReLU has become the default activation function for most hidden layers in deep neural networks due to its computational efficiency and its effectiveness in mitigating the vanishing gradient problem.

By the end of this lesson, you'll understand what ReLU is, why it's so popular, and how to implement and incorporate it into your neural network architecture. We'll also modify our DenseLayer class to support different activation functions, making our neural network framework more flexible and powerful. Let's dive in!

As we've seen in our previous work, activation functions introduce non-linearity into our neural networks. Without them, no matter how many layers we stack, our network would merely compute a linear transformation of the input data.

Let's quickly recall the sigmoid activation function we've been using:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

The sigmoid function maps any input to a value between 0 and 1, creating a smooth S-shaped curve. While it works well for certain tasks, sigmoid has some significant limitations:

- Vanishing gradients: When inputs are large in magnitude (strongly positive or strongly negative), the gradient of the `sigmoid` function becomes extremely small, slowing down learning. We'll discuss gradients in much more detail in our next course on training neural networks; for now, you can think of the gradient as the fundamental feedback signal the network uses to adapt its weights and learn.
- Computational expense: Computing exponentials is relatively expensive compared with simple operations such as addition or taking a maximum.
- Not zero-centered: The output is always positive, which can cause zig-zagging dynamics during optimization.

These limitations become particularly problematic in deep networks with many layers. This is where alternative activation functions like ReLU come into play, offering solutions to many of these challenges.
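The vanishing-gradient limitation can be checked numerically. The sketch below uses plain JavaScript rather than mathjs and relies on the standard identity σ'(x) = σ(x)(1 − σ(x)); `sigmoidDerivative` is an illustrative name, not part of the course code.

```javascript
// Sigmoid: squashes any real number into the open interval (0, 1).
function sigmoid(x) {
  return 1 / (1 + Math.exp(-x));
}

// Derivative of sigmoid, expressed through its own output: s * (1 - s).
function sigmoidDerivative(x) {
  const s = sigmoid(x);
  return s * (1 - s);
}

// The gradient peaks at x = 0 and collapses toward zero as |x| grows.
for (const x of [0, 2, 5, 10]) {
  console.log(
    `x = ${x}: sigmoid = ${sigmoid(x).toFixed(4)}, gradient = ${sigmoidDerivative(x).toExponential(2)}`
  );
}
```

The gradient never exceeds 0.25 (its value at x = 0) and is already below 0.0001 at x = 10, so multiplying many such factors across layers shrinks the learning signal quickly.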

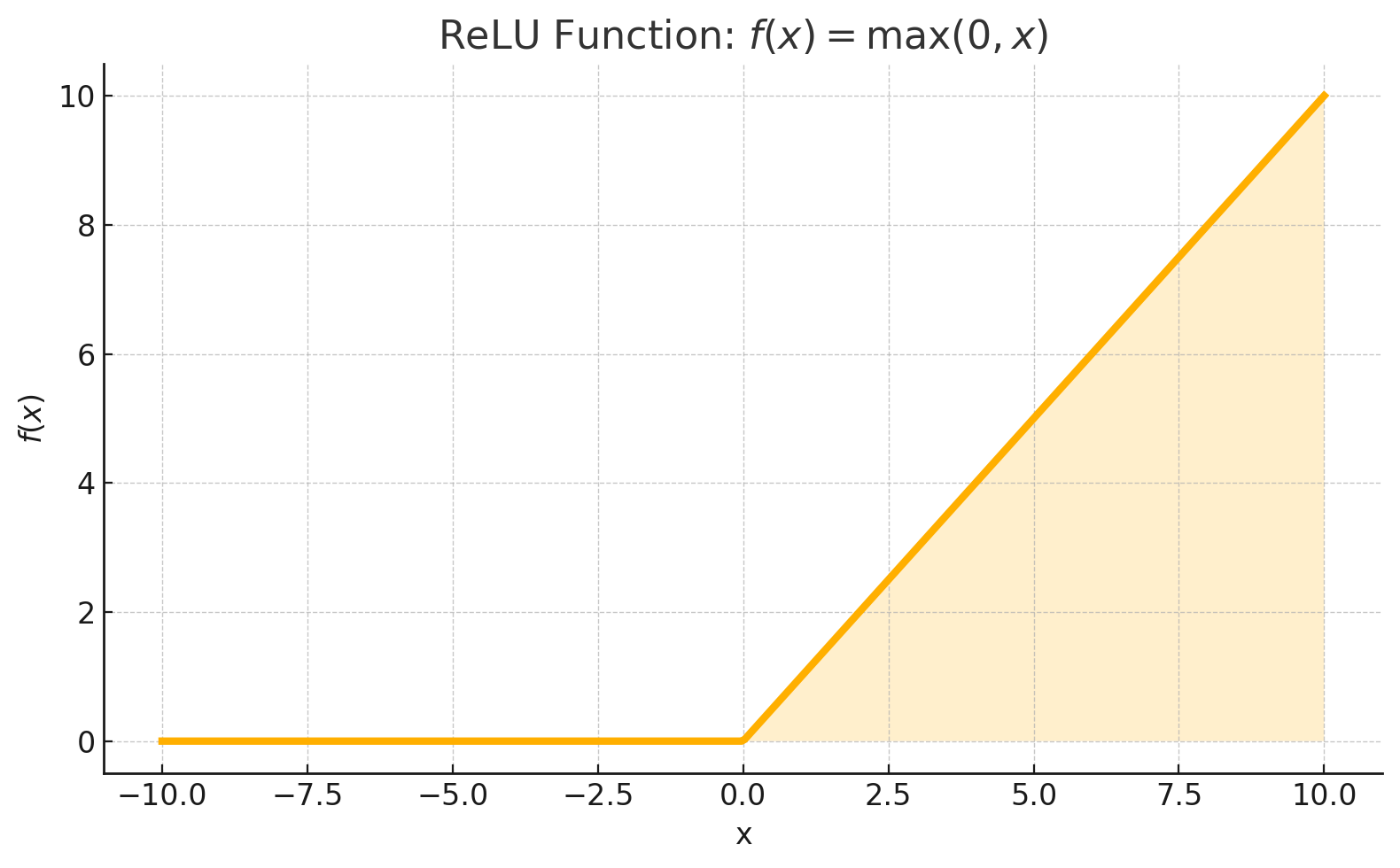

The Rectified Linear Unit (ReLU) is perhaps the simplest non-linear activation function, yet it has revolutionized deep learning. Its mathematical definition is elegantly straightforward:

$$\text{ReLU}(x) = \max(0, x)$$

In plain English: ReLU outputs the input directly if it's positive and outputs zero otherwise. This creates a simple "ramp" function that's linear for positive values and flat (constant at zero) for negative values.

The key advantages of ReLU over sigmoid are:

- Computational efficiency: `ReLU` involves only a simple max operation, making it much cheaper to compute than functions involving exponentials.
- Reduced vanishing gradient problem: For positive inputs, the gradient is always 1, so the learning signal passes through active units without shrinking, which typically speeds up learning. For negative inputs, the gradient is 0.
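A minimal scalar sketch of ReLU's gradient makes the contrast with sigmoid visible. Treating the derivative at exactly `x = 0` as 0 is a common convention assumed here, and the function names are illustrative.

```javascript
// ReLU on a single number: pass positives through, clamp everything else to 0.
function relu(x) {
  return Math.max(0, x);
}

// ReLU's gradient: 1 where the input is positive, 0 where it is negative.
// At exactly x = 0 the derivative is undefined; using 0 there is a convention.
function reluDerivative(x) {
  return x > 0 ? 1 : 0;
}

for (const x of [-3, -0.5, 0.5, 3, 100]) {
  console.log(`x = ${x}: relu = ${relu(x)}, gradient = ${reluDerivative(x)}`);
}
```

For every positive input the gradient is exactly 1, however large the input; for negative inputs it is exactly 0, so those units pass no gradient back.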

Let's implement the ReLU activation function in JavaScript using mathjs. The implementation is simple, but to make it robust, we'll handle both numbers and arrays/matrices:

This function checks whether the input `x` is a single number or an array/matrix. If it's a number, it simply returns the maximum of 0 and `x`. If it's an array or matrix, it uses mathjs's `map` function to apply the same operation element-wise. This ensures our `relu` function works for both the scalar and the array/matrix inputs we encounter in our neural network.
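For readers who want to experiment without installing mathjs, here is a dependency-free sketch of the same idea, assuming plain nested arrays stand in for matrices. It is an illustration, not the course's implementation, and it does not handle mathjs `Matrix` objects; with mathjs, the array branch would instead be `math.map(x, (v) => Math.max(0, v))`.

```javascript
// A dependency-free sketch of the relu helper described above.
// Numbers are clamped directly; arrays (including nested arrays used as
// matrices) are processed element-wise by recursing into each entry.
function relu(x) {
  if (typeof x === 'number') {
    return Math.max(0, x);
  }
  if (Array.isArray(x)) {
    return x.map((v) => relu(v));
  }
  throw new TypeError('relu expects a number or a (nested) array of numbers');
}

console.log(relu(-2));                 // value: 0
console.log(relu([1.5, -0.3, 0]));     // values: [1.5, 0, 0]
console.log(relu([[1, -1], [-2, 2]])); // values: [[1, 0], [0, 2]]
```

Recursing on `Array.isArray` keeps a single code path for vectors and matrices, mirroring how `math.map` treats arrays of any dimension.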

Now that we have both sigmoid and ReLU activation functions, let's modify our DenseLayer class to support different activation functions. This will make our neural network architecture more flexible. Here is the updated implementation:

Key points in this implementation:

- The constructor takes an `activationFnName` parameter (defaulting to `'sigmoid'` for backward compatibility).
- The activation function is selected based on the provided name.
- The `forward` method computes the weighted sum, adds the biases, and then applies the selected activation function.
- The use of `math.add(weightedSum, this.biases)` ensures that biases are added correctly to the weighted sum, leveraging mathjs's broadcasting.

The forward method remains the same, but now it will use whichever activation function was selected during initialization.
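The following is a hypothetical plain-JavaScript sketch of the same design: a name-to-function lookup in the constructor and a forward pass that computes the weighted sum, adds the biases, and applies the chosen activation. It avoids mathjs, so the matrix product and bias broadcast are written as explicit loops. The constructor signature, the `ACTIVATIONS` table, the weight range, and the zero biases are assumptions, not the course's exact code.

```javascript
// Scalar activation functions, keyed by name (hypothetical lookup table).
const ACTIVATIONS = {
  sigmoid: (z) => 1 / (1 + Math.exp(-z)),
  relu: (z) => Math.max(0, z),
};

class DenseLayer {
  // nInputs: features per sample; nNeurons: units in this layer.
  // activationFnName defaults to 'sigmoid' for backward compatibility.
  constructor(nInputs, nNeurons, activationFnName = 'sigmoid') {
    const activation = ACTIVATIONS[activationFnName];
    if (!activation) {
      throw new Error(`Unsupported activation function: ${activationFnName}`);
    }
    this.activationFnName = activationFnName;
    this.activationFn = activation;
    // Weights: nInputs x nNeurons matrix of small random values (assumed init).
    this.weights = Array.from({ length: nInputs }, () =>
      Array.from({ length: nNeurons }, () => 0.1 * (Math.random() * 2 - 1)));
    // One bias per neuron, initialized to zero (assumed).
    this.biases = new Array(nNeurons).fill(0);
  }

  // inputs: array of samples, each an array of nInputs numbers.
  forward(inputs) {
    return inputs.map((sample) =>
      this.biases.map((bias, j) => {
        // Weighted sum for neuron j, starting from its bias (the broadcast step).
        let z = bias;
        for (let i = 0; i < sample.length; i++) {
          z += sample[i] * this.weights[i][j];
        }
        return this.activationFn(z);
      }));
  }
}

// Usage: a ReLU layer with 3 inputs and 4 neurons, applied to 2 samples.
const layer = new DenseLayer(3, 4, 'relu');
console.log(layer.forward([[1, 2, 3], [-1, -2, -3]])); // 2 x 4, all values >= 0
```

Throwing on an unknown name, rather than silently falling back to sigmoid, is one design choice; it surfaces typos such as `'Relu'` immediately.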

With our enhanced DenseLayer class, we can now create an MLP that uses different activation functions for different layers. This is a common practice in deep learning, where ReLU is typically used for hidden layers and sigmoid (or other functions) for the output layer, depending on the task.

Let's create an MLP with ReLU for the first layer and sigmoid for the subsequent layers:

This creates a 3-layer MLP with:

- A first layer using `ReLU` activation with 5 neurons
- A second layer using `sigmoid` activation with 3 neurons
- An output layer using `sigmoid` activation with 1 neuron
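A compact, self-contained sketch of this 5-3-1 mixed-activation network, in plain JavaScript without mathjs. The `makeLayer` helper is a hypothetical stand-in for `DenseLayer` that works on a single sample; the random weights in [-1, 1), the zero biases, and the 4-feature input are assumptions chosen for illustration.

```javascript
const sigmoid = (z) => 1 / (1 + Math.exp(-z));
const relu = (z) => Math.max(0, z);

// Hypothetical stand-in for DenseLayer: random weights, zero biases (assumed).
// Returns a function mapping one input vector to this layer's activations.
function makeLayer(nInputs, nNeurons, activation) {
  const weights = Array.from({ length: nInputs }, () =>
    Array.from({ length: nNeurons }, () => Math.random() * 2 - 1));
  const biases = new Array(nNeurons).fill(0);
  return (sample) => biases.map((b, j) =>
    activation(sample.reduce((z, x, i) => z + x * weights[i][j], b)));
}

class MLP {
  constructor(layers) {
    this.layers = layers;
  }

  // Each layer's output becomes the next layer's input.
  forward(sample) {
    return this.layers.reduce((activations, layer) => layer(activations), sample);
  }
}

// ReLU for the first layer, sigmoid for the rest; 4 input features assumed.
const mlp = new MLP([
  makeLayer(4, 5, relu),
  makeLayer(5, 3, sigmoid),
  makeLayer(3, 1, sigmoid),
]);
console.log(mlp.forward([0.5, -1.2, 3.0, 0.7])); // one value strictly between 0 and 1
```

Because each layer is just a function from one activation vector to the next, the forward pass is a `reduce` over the layers, and mixing activations only means passing a different function to each layer.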

Let's see what happens when we forward propagate our input through this network with mixed activations:

This gives us:

Our MLP with mixed activations is working! But to really understand how ReLU affects our network, let's try an input with mostly negative values:

The result reveals a fascinating aspect of ReLU:

Look at the output of the first layer! It's all zeros. This illustrates a key property of ReLU: it outputs zero for every negative weighted sum, which produces sparse activation patterns. In this case, every first-layer neuron computed a negative (or zero) weighted sum for this input, so ReLU set all of their outputs to zero.

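Despite this extreme first-layer output, our network still produced a non-zero final output. Why? When every first-layer activation is zero, the second layer's weighted sum is zero, so each of its neurons receives only its bias. Sigmoid maps any real number, including zero, to a value strictly between 0 and 1 (for example, sigmoid(0) = 0.5), so the second layer still passes non-zero values to the output layer, and the output layer's sigmoid is always strictly positive.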
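To make this concrete, here is a tiny hand-built network in plain JavaScript with the same layer pattern (ReLU, then sigmoid, then sigmoid). The input, the weights, and the `dense` helper are hypothetical and chosen only so the arithmetic is easy to follow. All biases are zero, so the non-zero output cannot come from large biases.

```javascript
const sigmoid = (z) => 1 / (1 + Math.exp(-z));
const relu = (z) => Math.max(0, z);

// One dense layer applied to a single sample: activation(sample . W + b).
function dense(sample, weights, biases, activation) {
  return biases.map((b, j) =>
    activation(sample.reduce((z, x, i) => z + x * weights[i][j], b)));
}

// Hand-picked illustrative parameters (hypothetical, not the lesson's values).
const input = [-1, -2];                  // mostly negative input
const W1 = [[0.5, 0.2], [0.3, 0.4]];     // 2 inputs -> 2 ReLU units
const W2 = [[0.7, -0.4], [0.1, 0.9]];    // 2 -> 2 sigmoid units
const W3 = [[1.0], [1.0]];               // 2 -> 1 sigmoid output

// Weighted sums are -1.1 and -1.0, so ReLU zeroes both.
const h1 = dense(input, W1, [0, 0], relu);
// Zero inputs and zero biases give pre-activations of 0, and sigmoid(0) = 0.5.
const h2 = dense(h1, W2, [0, 0], sigmoid);
// Output pre-activation: 0.5 * 1.0 + 0.5 * 1.0 = 1, so the output is sigmoid(1).
const out = dense(h2, W3, [0], sigmoid);

console.log(h1);  // values: [0, 0]
console.log(h2);  // values: [0.5, 0.5]
console.log(out); // value: about 0.731
```

The first layer is completely silent, yet the sigmoid layers turn their zero pre-activations into 0.5, which is enough to carry a non-zero signal all the way to the output.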

Great work! You've now learned about the ReLU activation function, its advantages over sigmoid, and how to implement and use it in your neural network framework. You've also seen how to build MLPs with mixed activation functions and observed the unique behavior of ReLU in practice.

Up next, you'll get hands-on experience with a practice section focused on ReLU, where you'll solidify your understanding by applying what you've learned. After that, we'll move on to discuss activation functions specifically designed for output layers, such as linear and softmax activations, and see how they are used for different types of prediction tasks. Your neural network toolkit is expanding, and you're well on your way to building more flexible and powerful models!