Welcome to Putting Bedrock Models to Action with Strands Agents! Having mastered the fundamentals of foundation models and data management in your previous courses, you're now ready to take the next exciting step: building intelligent, autonomous agents that can think, reason, and take meaningful actions in the real world.

This comprehensive course will transform your understanding from working with static model interactions to creating dynamic, goal-oriented AI systems. You'll master the Strands framework, an innovative Python library that orchestrates complex agent behaviors through sophisticated message processing and tool integration. Throughout this journey, we'll explore four essential units: building your first Bedrock-powered agent, integrating intelligent tools for real-world actions, connecting agents to knowledge bases for informed decision-making, and implementing advanced Model Context Protocol features for seamless multi-system interactions.

In this opening lesson, we'll establish the foundation by creating your first intelligent agent using the Strands framework. You'll configure a Bedrock model with proper guardrails, define an agent's personality through system prompts, and witness how autonomous AI systems can engage in meaningful conversations. By the end of this lesson, you'll have a fully functional AWS Technical Assistant agent that demonstrates the core principles of agentic AI behavior.

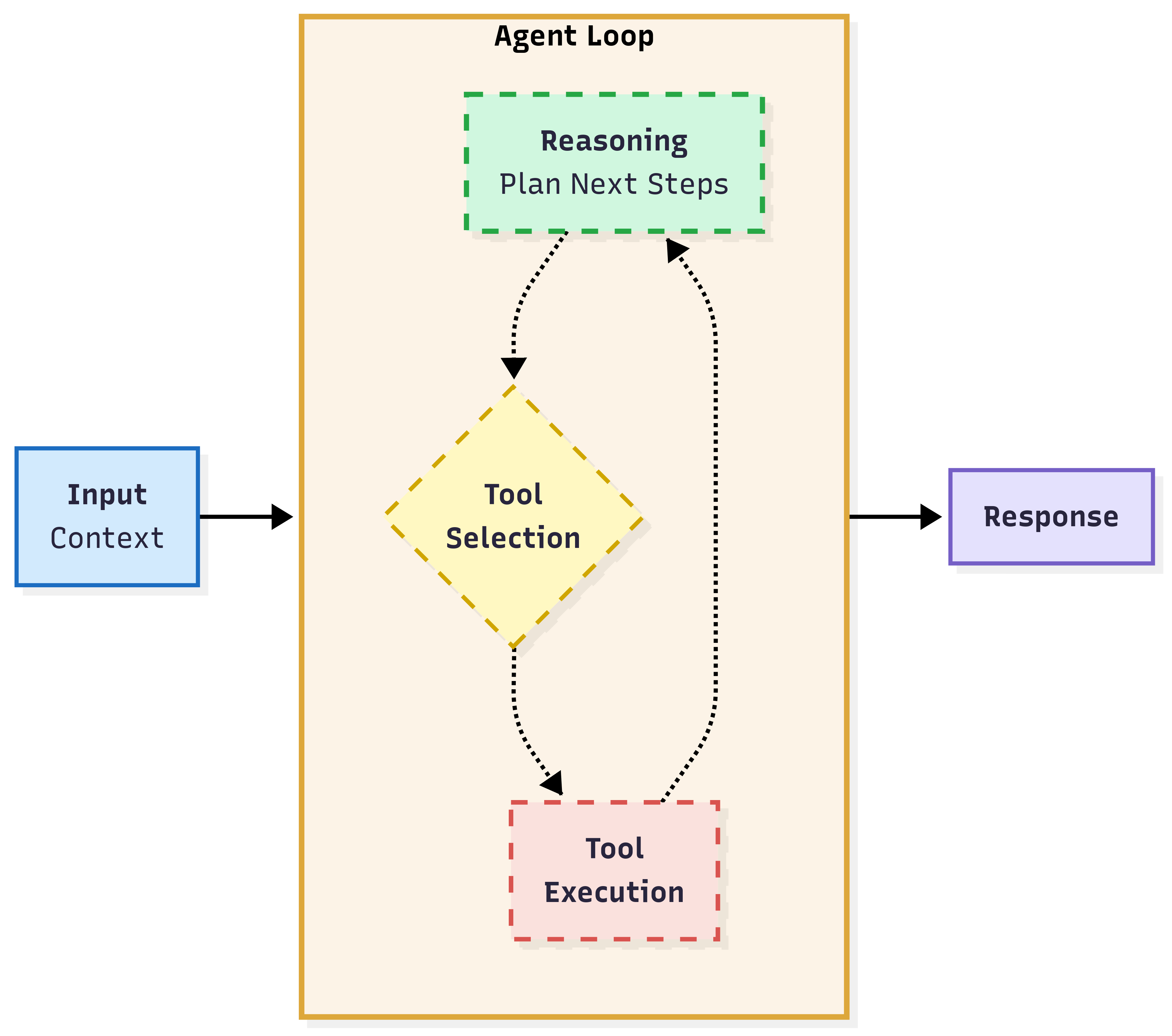

Before diving into implementation, let’s make the idea of Agentic AI concrete and relatable. In simple terms, an AI Agent is an LLM in a loop: an AI system that repeatedly reasons about your goal, decides the next step, takes actions with tools, observes results, and continues until the goal is reached. Instead of a single prompt-in/answer-out exchange, it maintains persistent context across steps and sessions, adapting as new information appears. The main steps involve:

- Thinking: analyze the request and plan the next step;

- Deciding: choose to call a tool or ask a clarifying question;

- Acting: invoke tools/APIs (search, knowledge bases, AWS SDKs, internal services);

- Observing: read tool results and update context/memory;

- Iterating: repeat until a stopping condition (goal met, human approval, or guardrail block).

This think-decide-act-observe loop gives agents autonomy: they can decompose problems, use tools to take real actions, and incorporate guardrails, policies, and feedback into their decisions. The Strands Agents framework structures this loop and manages state so you focus on capabilities, not plumbing.
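The loop can be sketched in plain Python without any framework. This is a toy illustration, not how Strands implements it: `fake_model`, the `TOOLS` registry, and the `word_count` tool are hypothetical stand-ins for a real LLM call and real tools.

```python
from typing import Callable, Dict, List

# Hypothetical tool registry: each tool is a plain function from str to str.
TOOLS: Dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
}


def fake_model(context: List[dict]) -> dict:
    """Stand-in for an LLM call: request a tool once, then answer."""
    last = context[-1]
    if last["role"] == "user":
        # Thinking + deciding: the "model" chooses to call a tool.
        return {"type": "tool_call", "tool": "word_count", "input": last["content"]}
    # A tool result is now in context, so the "model" gives a final answer.
    return {"type": "answer", "content": f"Your message has {last['content']} words."}


def run_agent(goal: str, max_steps: int = 5) -> str:
    context = [{"role": "user", "content": goal}]
    for _ in range(max_steps):
        decision = fake_model(context)                        # think + decide
        if decision["type"] == "answer":                      # stop: goal met
            return decision["content"]
        result = TOOLS[decision["tool"]](decision["input"])   # act
        context.append({"role": "tool", "content": result})   # observe
    return "Stopped: step limit reached."                     # stop: step budget


print(run_agent("How many words are in this sentence"))
```

The step limit plays the role of a stopping condition alongside "goal met": without it, a model that keeps requesting tools would loop forever.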

Agentic AI systems differ from common non-agentic patterns that are effective in narrower situations. Understanding the alternatives clarifies where each approach fits.

- Rule-based chatbots: predefined flows for predictable tasks (e.g., reset password). Simple and reliable, but no reasoning or tool-driven autonomy.

- Task-specific ML/pipelines: classifiers or extractors that do one step well (e.g., sentiment, entity extraction). Accurate and efficient, but not conversational or goal-directed.

- Single-turn LLM calls: prompt in, answer out for Q&A, summarization, or rewrites. No memory, no multi-step planning, no tool use.

Final takeaway: use an agent when success requires multi-step decisions, tool usage, changing strategies, and measurable progress toward a goal. Choose the simpler alternatives for fixed, deterministic flows or one-off transformations; Strands lets agents handle the rest by orchestrating the loop, preserving context, and enforcing guardrails.

The Strands Agents framework provides a Python library designed specifically for building these autonomous AI systems, combining a simple interface with powerful capabilities. At its core, Strands orchestrates the interplay between human input, AI reasoning, tool execution, and response generation through what we call the agent loop.

The agent loop represents the heartbeat of intelligent behavior: when you send a message to a Strands agent, it enters a cycle of reasoning about your input, determining whether tools are needed to fulfill your request, executing those tools when necessary, and integrating the results back into its reasoning process. This cycle can repeat multiple times within a single interaction, allowing agents to perform complex, multi-step tasks autonomously while maintaining complete transparency about their decision-making process.

Message processing within Strands follows a structured approach in which user messages, assistant responses, and tool results all flow through the system in a standardized format. The framework automatically handles the intricate details of message formatting, state management, and context preservation, allowing you to focus on defining your agent's capabilities rather than managing the underlying mechanics of conversation flow and tool orchestration.
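To make the "standardized format" concrete, here is a sketch of a conversation history, assuming the Amazon Bedrock Converse-style message shape (a role plus a list of content blocks). The `list_buckets` tool name, the `t1` identifier, and the `text_of` helper are hypothetical and used only for illustration.

```python
from typing import Dict, List

# One conversation: user message, assistant tool request, tool result, final answer.
conversation: List[Dict] = [
    {"role": "user", "content": [{"text": "How many buckets do I have?"}]},
    {"role": "assistant", "content": [
        # The assistant asks for a tool call instead of answering directly.
        {"toolUse": {"toolUseId": "t1", "name": "list_buckets", "input": {}}},
    ]},
    {"role": "user", "content": [
        # Tool results are sent back to the model in a user-role message.
        {"toolResult": {"toolUseId": "t1", "status": "success",
                        "content": [{"text": "3"}]}},
    ]},
    {"role": "assistant", "content": [{"text": "You have 3 buckets."}]},
]


def text_of(message: Dict) -> str:
    """Concatenate the plain-text blocks of a message, skipping tool blocks."""
    return "".join(block["text"] for block in message["content"] if "text" in block)


print(text_of(conversation[-1]))
```

Because every turn shares this shape, the framework can append tool requests and results to the same history that holds ordinary user and assistant text.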

Let's begin building your first Strands agent by establishing the necessary imports and configuration constants that will power our AWS Technical Assistant.

Our imports bring in the essential Strands components: the Agent class that orchestrates intelligent behavior and the BedrockModel class that provides seamless integration with Amazon Bedrock's foundation models. The MODEL_ID specifies Claude Sonnet 4, an Anthropic model that excels at reasoning, analysis, and technical explanations, making it a good fit for our AWS Technical Assistant use case.

The guardrail configuration leverages environment variables for secure, flexible deployment: GUARDRAIL_ID references your specific Bedrock guardrail configuration, while GUARDRAIL_VERSION defaults to "DRAFT" if not explicitly set, allowing for easy testing and development workflows without hardcoding sensitive configuration details directly in your source code.
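A minimal sketch of this configuration follows. The exact `MODEL_ID` string is an assumption (a Claude Sonnet 4 inference profile ID); confirm the ID available in your account and Region. The `load_guardrail_config` helper is hypothetical and simply wraps the environment lookups described above. The Strands imports themselves would be `from strands import Agent` and `from strands.models import BedrockModel`.

```python
import os
from typing import Mapping, Tuple

# Assumption: Claude Sonnet 4 inference profile ID; verify it for your Region.
MODEL_ID = "us.anthropic.claude-sonnet-4-20250514-v1:0"


def load_guardrail_config(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Read guardrail settings from the environment (hypothetical helper)."""
    guardrail_id = env.get("GUARDRAIL_ID")
    if not guardrail_id:
        # Fail early rather than silently running without a guardrail.
        raise RuntimeError("Set the GUARDRAIL_ID environment variable first.")
    # GUARDRAIL_VERSION falls back to "DRAFT" when it is not set.
    return guardrail_id, env.get("GUARDRAIL_VERSION", "DRAFT")


# Usage, once GUARDRAIL_ID is exported in your shell:
#   GUARDRAIL_ID, GUARDRAIL_VERSION = load_guardrail_config()
```

Passing the environment as a mapping keeps the lookup easy to exercise with a plain dictionary before any AWS resources exist.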

With our environment prepared, we now create the foundational model that will power our agent's intelligence and ensure safe, compliant interactions through integrated guardrails.

The BedrockModel instantiation configures the connection to Amazon Bedrock and attaches safety measures to every model call. By specifying the guardrail_id and guardrail_version, we ensure that Bedrock evaluates prompts and model responses against the content filters, topic restrictions, and other policies defined in your Bedrock guardrail configuration. This approach adds a layer of defense in depth, where inappropriate content is blocked at the Bedrock service level before reaching your application or end users.

This configuration creates a model instance that balances powerful AI capabilities with responsible AI practices, helping your agent provide helpful, accurate assistance while maintaining appropriate boundaries and safety standards throughout all interactions. For production use, pin GUARDRAIL_VERSION to a published guardrail version rather than relying on the DRAFT default.
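The model setup described here might look like the sketch below. The `guardrail_id` and `guardrail_version` keyword names come from the lesson text; the `model_settings` and `build_model` helpers are hypothetical, and the Strands import is placed inside the function only so the sketch can be loaded on a machine without the SDK installed.

```python
from typing import Dict


def model_settings(model_id: str, guardrail_id: str,
                   guardrail_version: str = "DRAFT") -> Dict[str, str]:
    """Collect the keyword arguments passed to BedrockModel (hypothetical helper)."""
    return {
        "model_id": model_id,
        "guardrail_id": guardrail_id,            # which Bedrock guardrail to apply
        "guardrail_version": guardrail_version,  # "DRAFT" or a published version
    }


def build_model(model_id: str, guardrail_id: str, guardrail_version: str = "DRAFT"):
    """Create the Bedrock-backed model with the guardrail attached."""
    # Imported lazily: requires the strands-agents package to be installed.
    from strands.models import BedrockModel

    return BedrockModel(**model_settings(model_id, guardrail_id, guardrail_version))


# Usage (needs AWS credentials and access to the model in Bedrock):
#   model = build_model(MODEL_ID, GUARDRAIL_ID, GUARDRAIL_VERSION)
```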

Every effective agent needs a clear sense of purpose and personality, which we establish through a carefully crafted system prompt that guides the model's behavior and response style.

The system prompt serves as your agent's fundamental personality and expertise definition, establishing both its identity as an AWS Technical Assistant and its behavioral guidelines for providing clear, accurate information. This seemingly simple statement carries significant weight in shaping how the model interprets queries, structures responses, and maintains consistency across interactions.

Well-crafted system prompts like this one establish professional expertise while promoting helpful, informative communication patterns. The emphasis on clarity and accuracy ensures that technical explanations remain accessible to users with varying levels of AWS experience, while the focus on AWS services creates appropriate boundaries around the agent's area of specialization.

Now we combine our configured model and system prompt to instantiate a fully functional Strands agent capable of autonomous reasoning and intelligent conversation.

This Agent instantiation represents the culmination of our setup process, binding together the secure Bedrock model with the defined personality and expertise. The Strands framework now handles all the complex orchestration between your interactions and the underlying model, including message formatting, context management, and the agent loop we discussed earlier.

Behind this simple instantiation lies sophisticated functionality: the agent automatically manages conversation history, processes your inputs through the reasoning cycle, and maintains consistent behavior aligned with your system prompt. This abstraction allows you to focus on meaningful interactions rather than the technical details of model communication and state management.
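As a sketch of these two steps together, the system prompt and the agent could be defined as follows. The prompt wording is an assumption based on the description above (an AWS Technical Assistant that gives clear, accurate information), and `create_agent` is a hypothetical wrapper around the Strands `Agent` constructor.

```python
# Assumed wording; adjust it to shape your agent's identity and tone.
SYSTEM_PROMPT = (
    "You are an AWS Technical Assistant. "
    "Provide clear, accurate information about AWS services."
)


def create_agent(model):
    """Bind a configured model to the system prompt (hypothetical helper)."""
    # Imported lazily: requires the strands-agents package to be installed.
    from strands import Agent

    return Agent(model=model, system_prompt=SYSTEM_PROMPT)


# Usage, given a BedrockModel instance named `model`:
#   agent = create_agent(model)
#   agent.messages  # conversation history kept by the agent between calls
```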

With our agent fully configured, we can now experience the power of agentic AI by sending our first query and witnessing intelligent, autonomous response generation.

This simple function call initiates the complete agent loop: your query flows through the reasoning process, the model generates a comprehensive response based on its AWS expertise, and the result demonstrates the agent's ability to provide educational, well-structured explanations tailored to the specified audience level.
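A minimal sketch of sending a query, assuming an `agent` built as described earlier. A Strands agent is invoked by calling it with the message text; the `ask` helper and the example question are hypothetical.

```python
def ask(agent, question: str) -> str:
    """Send one message through the agent loop and return the reply as text."""
    result = agent(question)  # calling the agent runs the full agent loop
    return str(result)        # str() on the result gives the final response text


# Usage with a real agent (needs AWS credentials and Bedrock model access):
#   print(ask(agent, "Explain Amazon S3 to a beginner."))
```

Because `ask` only requires something callable, you can try it with a stub function before connecting to Bedrock.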

The response showcases exactly what makes agentic AI so powerful: rather than providing a brief, generic answer, your AWS Technical Assistant autonomously structured a comprehensive beginner's guide complete with clear sections, practical examples, and actionable next steps. The agent understood the context of "beginner" and adapted its communication style accordingly, providing detailed explanations, visual formatting, and real-world applications that make complex technical concepts accessible and engaging.

Congratulations on successfully building your first intelligent agent with the Strands framework! You've witnessed firsthand how a few lines of configuration can create a sophisticated AI system capable of autonomous reasoning, structured thinking, and expert-level communication. Your AWS Technical Assistant demonstrates the fundamental principles of agentic AI: understanding context, maintaining personality consistency, and providing value-driven responses that go far beyond simple question-answering.

This foundational experience has prepared you for the exciting challenges ahead, where we'll expand your agent's capabilities with tool integration, knowledge base connections, and advanced protocol features. In the upcoming practice section, you'll have the opportunity to experiment with different system prompts, explore various Bedrock models, and gain hands-on experience with the complete agent development workflow that will serve as your launching pad for building truly remarkable AI applications.