Welcome to "Understanding Activation Functions". In this lesson, we will investigate activation functions, the components of a neural network that determine the output of each neuron. We will cover the theory and C++ implementations of five of them: step, sigmoid, ReLU, tanh, and softplus.

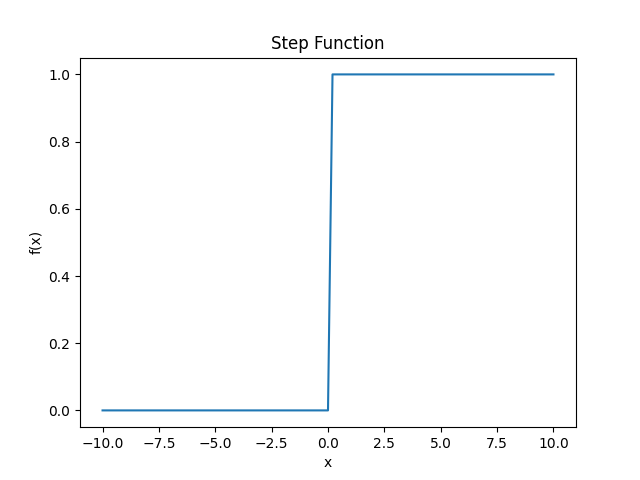

An activation function is applied to a neuron's input to produce that neuron's output. You can think of it as a gate: based on the input, it decides whether the neuron should be activated and, for most of the functions covered here, how strongly. Each of the five functions below implements this gate differently.

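Mathematical Formula (step function, assuming the common convention of a threshold at 0):

$$
f(x) = \begin{cases} 1 & \text{if } x \ge 0 \\ 0 & \text{otherwise} \end{cases}
$$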
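A minimal C++ sketch of the step function follows. The threshold of 0 and the choice to return 1 when the input equals the threshold are assumptions (conventions differ), and the name `step` is only illustrative.

```cpp
// Binary step activation: 1 when the input reaches the threshold, else 0.
// Assumption: the threshold defaults to 0 and x == threshold counts as "on".
int step(double x, double threshold = 0.0) {
    return x >= threshold ? 1 : 0;
}
```

With the default threshold, `step(-0.5)` yields 0 while `step(0.0)` and `step(2.0)` yield 1, which is the all-or-nothing gate described earlier.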

Mathematical Formula (sigmoid):

$$
\sigma(x) = \frac{1}{1 + e^{-x}}
$$

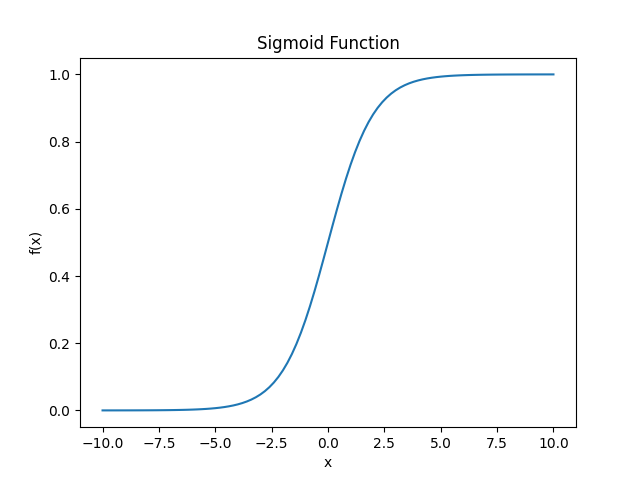

The sigmoid function maps any real value to a value strictly between 0 and 1, producing an S-shaped curve. It is often used when the output needs to represent a probability.
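As a rough sketch, the sigmoid can be written in C++ with `std::exp` from `<cmath>`. The function name `sigmoid` is an illustrative choice, not necessarily the one used in the original lesson code.

```cpp
#include <cmath>

// Logistic sigmoid: 1 / (1 + e^(-x)).
// Mathematically the result lies in (0, 1); in floating point it can
// saturate to exactly 0 or 1 for inputs of very large magnitude.
double sigmoid(double x) {
    return 1.0 / (1.0 + std::exp(-x));
}
```

For example, `sigmoid(0.0)` evaluates to 0.5, the midpoint of the S-curve, and `sigmoid(x) + sigmoid(-x)` equals 1 up to rounding.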

Mathematical Formula (ReLU):

$$
f(x) = \max(0, x)
$$

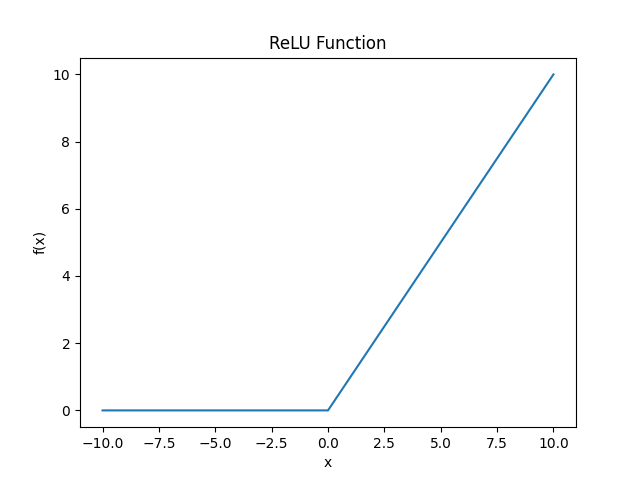

The ReLU (Rectified Linear Unit) function returns the input value itself if it is positive; otherwise, it returns zero. It is widely used in neural networks due to its simplicity and effectiveness.

C++ Implementation:
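One possible implementation is sketched below. It offers a scalar `relu` and an element-wise overload for a `std::vector<double>`. Both names and the vector overload are illustrative assumptions rather than the lesson's original code.

```cpp
#include <algorithm>
#include <vector>

// ReLU: passes positive inputs through unchanged, clamps everything else to 0.
double relu(double x) {
    return std::max(0.0, x);
}

// Element-wise ReLU over a vector, e.g. the pre-activations of one layer.
std::vector<double> relu(const std::vector<double>& xs) {
    std::vector<double> out(xs.size());
    // The lambda selects the scalar overload; passing `relu` directly
    // would be ambiguous because the name is overloaded.
    std::transform(xs.begin(), xs.end(), out.begin(),
                   [](double x) { return relu(x); });
    return out;
}
```

Applied to the vector `{-1.0, 0.0, 3.0}`, the overload returns `{0.0, 0.0, 3.0}`.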

ReLU Function Visualization

You can visualize the ReLU function in C++ using matplotlibcpp as before:
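The sketch below shows one way to prepare the data for such a plot. The helpers `linspace` and `relu_values` are hypothetical names introduced here. The plotting calls appear as comments because they need the third-party `matplotlibcpp.h` header and a Python installation with matplotlib, and the plotted range of -10 to 10 is an arbitrary choice.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// ReLU: max(0, x).
double relu(double x) { return std::max(0.0, x); }

// n evenly spaced values from lo to hi (requires n >= 2).
std::vector<double> linspace(double lo, double hi, std::size_t n) {
    std::vector<double> xs(n);
    const double dx = (hi - lo) / static_cast<double>(n - 1);
    for (std::size_t i = 0; i < n; ++i) {
        xs[i] = lo + dx * static_cast<double>(i);
    }
    return xs;
}

// Evaluate ReLU at every sample point.
std::vector<double> relu_values(const std::vector<double>& xs) {
    std::vector<double> ys;
    ys.reserve(xs.size());
    for (double x : xs) ys.push_back(relu(x));
    return ys;
}

// Plotting step (assumes matplotlib-cpp is available on the include path):
//   #include "matplotlibcpp.h"
//   namespace plt = matplotlibcpp;
//
//   std::vector<double> x = linspace(-10.0, 10.0, 201);
//   std::vector<double> y = relu_values(x);
//   plt::plot(x, y);
//   plt::title("ReLU Function");
//   plt::xlabel("x");
//   plt::ylabel("ReLU(x)");
//   plt::grid(true);
//   plt::show();
```

Keeping the sampling separate from the plotting calls means the numeric part can be compiled and checked without the plotting dependency.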

Mathematical Formula (tanh):

$$
\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}
$$
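For the hyperbolic tangent (tanh), a minimal C++ sketch can simply delegate to the standard library. The wrapper name `tanh_activation` is an illustrative assumption, chosen to avoid clashing with `std::tanh` itself.

```cpp
#include <cmath>

// Hyperbolic tangent activation: (e^x - e^(-x)) / (e^x + e^(-x)),
// with output between -1 and 1.
// Delegating to std::tanh avoids the inf/inf (NaN) result that a literal
// translation of the formula would produce for inputs of large magnitude.
double tanh_activation(double x) {
    return std::tanh(x);
}
```

Unlike the sigmoid, the output is centered on zero: `tanh_activation(0.0)` is 0, and the function is odd, so negative inputs map to negative outputs.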

Mathematical Formula (softplus):

$$
f(x) = \ln(1 + e^{x})
$$

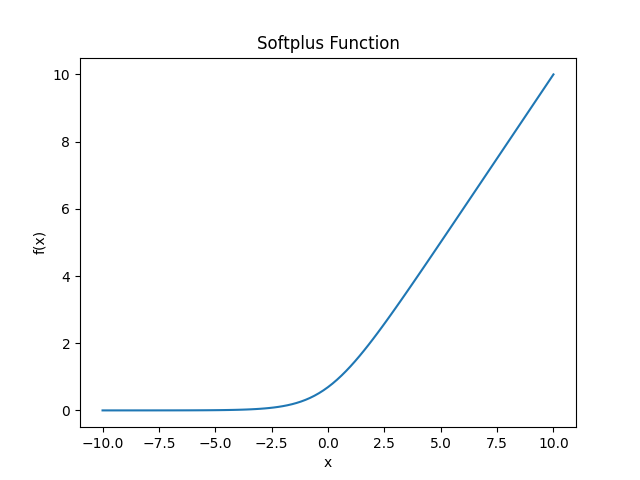

The softplus function is a smooth approximation of the ReLU function and is differentiable everywhere. It is defined as the natural logarithm of (1 + exp(x)).

C++ Implementation:
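A possible implementation is sketched below. Instead of translating ln(1 + exp(x)) literally, it uses the algebraically equivalent form max(x, 0) + ln(1 + exp(-|x|)). That rewrite is a choice made for this sketch so that `std::exp` never overflows for large positive inputs, and the name `softplus` is illustrative.

```cpp
#include <algorithm>
#include <cmath>

// Softplus: ln(1 + e^x), computed in the equivalent form
//   max(x, 0) + ln(1 + e^(-|x|))
// so that std::exp only ever receives a non-positive argument.
double softplus(double x) {
    return std::max(x, 0.0) + std::log1p(std::exp(-std::fabs(x)));
}
```

At `x = 0` the result is ln 2. For large positive `x` the result approaches `x`, and for large negative `x` it approaches 0, which is how softplus tracks ReLU away from the origin.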

Softplus Function Visualization

You can visualize the Softplus function in C++ using matplotlibcpp as before:
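A hedged sketch of the data preparation for this plot follows. The helper `sample_softplus` is a hypothetical name, the range of -10 to 10 is arbitrary, and the plotting calls are left as comments since they assume the third-party `matplotlibcpp.h` header and a Python installation with matplotlib.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Softplus: ln(1 + e^x), in an overflow-safe equivalent form.
double softplus(double x) {
    return std::max(x, 0.0) + std::log1p(std::exp(-std::fabs(x)));
}

// Fills xs with n evenly spaced points from lo to hi (requires n >= 2)
// and ys with softplus evaluated at each of those points.
void sample_softplus(double lo, double hi, std::size_t n,
                     std::vector<double>& xs, std::vector<double>& ys) {
    xs.resize(n);
    ys.resize(n);
    const double dx = (hi - lo) / static_cast<double>(n - 1);
    for (std::size_t i = 0; i < n; ++i) {
        xs[i] = lo + dx * static_cast<double>(i);
        ys[i] = softplus(xs[i]);
    }
}

// Plotting step (assumes matplotlib-cpp is available on the include path):
//   #include "matplotlibcpp.h"
//   namespace plt = matplotlibcpp;
//
//   std::vector<double> x, y;
//   sample_softplus(-10.0, 10.0, 201, x, y);
//   plt::plot(x, y);
//   plt::title("Softplus Function");
//   plt::xlabel("x");
//   plt::ylabel("Softplus(x)");
//   plt::grid(true);
//   plt::show();
```

The resulting curve should look like ReLU with the corner at the origin rounded off.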

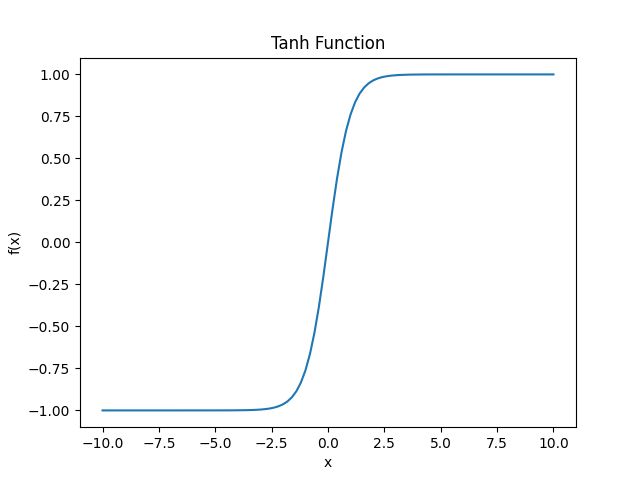

In this lesson, we explored the theory and C++ implementations of several important activation functions: step, sigmoid, ReLU, tanh, and softplus. You have learned how to implement these functions, their mathematical formulas, and how to visualize them using C++ and the matplotlibcpp library.

By mastering these activation functions, you are building a strong foundation for understanding and constructing neural networks in C++.