Welcome to the first step in your journey to understanding drawing recognition using Convolutional Neural Networks (CNNs). In this lesson, we will explore the problem of drawing recognition, why it is important, and how CNNs can help solve it. This will set the stage for building and training your own CNN models in the upcoming lessons.

Before proceeding, it's crucial to understand the final goal of this course path.

By the end of this course path, you will have the skills and knowledge to create an AI model capable of recognizing user-drawn sketches from a user interface. This involves building and training a Convolutional Neural Network (CNN) that can accurately identify and classify hand-drawn images. You will learn how to preprocess data, design and implement CNN architectures, and evaluate model performance. This course will empower you to develop applications that can interpret and understand drawings, paving the way for innovative solutions in fields like digital art, education, and interactive design.

As for this course, we will focus on a simple CNN model that can recognize hand-drawn digits. Let's move on to the next section to understand the problem of drawing recognition in more detail.

Drawing recognition is a fascinating and challenging problem in the field of computer vision. It involves teaching machines to understand and interpret hand-drawn sketches, which can vary significantly in style, size, and quality. This task is not only about recognizing shapes but also about understanding the context and meaning behind the drawings.

This is a complex problem because human drawings can be highly variable. For example, the same digit can be drawn in many different ways, and the quality of the drawing can vary from person to person. Additionally, drawings may contain noise or artifacts that can make recognition difficult.

To tackle this problem, we will use Convolutional Neural Networks (CNNs), which are a type of deep learning model specifically designed for image processing tasks. CNNs are capable of automatically learning and extracting features from images, making them well-suited for recognizing patterns in hand-drawn sketches. CNNs work by applying a series of convolutional filters to the input images, allowing them to learn hierarchical representations of the data. This means that the model can learn to recognize simple shapes in the early layers and more complex patterns in the deeper layers. By training on a large dataset of labeled images, CNNs can achieve high accuracy in recognizing and classifying hand-drawn sketches.
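To make the idea of a convolutional filter concrete, here is a minimal NumPy sketch that slides a single 3x3 filter over a tiny image. The helper `conv2d_valid`, the 6x6 test image, and the hand-picked vertical-edge filter are all assumptions made for illustration; a real CNN learns its filter values during training, and deep learning libraries use far faster implementations of this operation.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the responses.

    This is the cross-correlation that deep learning libraries call "convolution".
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=np.float32)
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the window by the filter element-wise and sum the result.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# Illustrative 6x6 image: dark left half, bright right half.
image = np.zeros((6, 6), dtype=np.float32)
image[:, 3:] = 1.0

# Hand-picked filter that responds to dark-to-bright vertical edges.
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=np.float32)

response = conv2d_valid(image, kernel)
print(response)  # every row is [0. 3. 3. 0.]: strong response only at the edge
```

The filter produces zeros over the flat regions and large values where the brightness changes, which is the kind of simple feature an early CNN layer tends to pick up.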

Convolutional Neural Networks (CNNs) are a powerful tool for image recognition tasks, including drawing recognition. They are designed to automatically learn and extract features from images, making them particularly effective for recognizing patterns in visual data. CNNs consist of multiple layers, including convolutional layers, pooling layers, and fully connected layers, which work together to process and analyze images.
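One way to see how these layer types fit together is to trace how the shape of a single image changes as it passes through them. The sketch below does this with plain arithmetic; the layer settings (one 3x3 convolution with 32 filters, one 2x2 pooling step, and a 10-unit output layer) are hypothetical values chosen for illustration, not the architecture used later in the course.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_output_size(size, pool):
    """Spatial size after non-overlapping pooling (stride equals pool size)."""
    return size // pool


# Hypothetical layer settings, chosen for illustration only.
side, channels = 28, 1                       # MNIST-sized grayscale input

side = conv_output_size(side, kernel=3)      # 3x3 convolution, no padding
channels = 32                                # one output channel per filter
conv_shape = (side, side, channels)

side = pool_output_size(side, pool=2)        # 2x2 pooling halves width and height
pool_shape = (side, side, channels)

flat_features = side * side * channels       # flatten before the fully connected layer
num_classes = 10                             # one output unit per digit

print("after conv:   ", conv_shape)          # (26, 26, 32)
print("after pooling:", pool_shape)          # (13, 13, 32)
print("flattened:    ", flat_features)       # 5408
print("output units: ", num_classes)         # 10
```

The convolutional layer grows the number of channels, pooling shrinks the spatial size, and the fully connected layer maps the flattened features to one score per class.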

Drawing recognition is the process of identifying and classifying hand-drawn sketches. This is a crucial task in various applications, such as digitizing handwritten notes, enabling sketch-based search engines, and even in the development of AI-driven art tools. In this lesson, we will delve into the basics of this problem and introduce you to the MNIST dataset, a popular dataset for training image processing systems.

The MNIST dataset consists of 70,000 grayscale images of handwritten digits (60,000 for training and 10,000 for testing), each labeled with the corresponding number. Here’s how you can load and preprocess a smaller sample of this dataset using Python:
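A minimal sketch of these steps, assuming TensorFlow's Keras API is installed. The helper names `preprocess_images` and `load_mnist_subset` and the subset sizes (1,000 training and 200 test images) are assumptions made for illustration, not values taken from the lesson.

```python
import numpy as np


def preprocess_images(images):
    """Add a channel axis and scale uint8 pixels from [0, 255] to [0, 1]."""
    images = images.reshape(-1, 28, 28, 1)
    return images.astype("float32") / 255.0


def load_mnist_subset(n_train=1000, n_test=200):
    """Load MNIST through Keras and return a small preprocessed subset.

    The default subset sizes are illustrative assumptions.
    """
    # Imported here so preprocess_images works without TensorFlow installed.
    from tensorflow.keras.datasets import mnist
    from tensorflow.keras.utils import to_categorical

    (x_train, y_train), (x_test, y_test) = mnist.load_data()

    # Keep only the first few samples to make experiments fast.
    x_train, y_train = x_train[:n_train], y_train[:n_train]
    x_test, y_test = x_test[:n_test], y_test[:n_test]

    # Reshape to (N, 28, 28, 1) and normalize to [0, 1].
    x_train = preprocess_images(x_train)
    x_test = preprocess_images(x_test)

    # Convert integer labels 0-9 into one-hot vectors of length 10.
    y_train = to_categorical(y_train, 10)
    y_test = to_categorical(y_test, 10)

    return (x_train, y_train), (x_test, y_test)
```

Calling `(x_train, y_train), (x_test, y_test) = load_mnist_subset()` downloads the dataset on first use and returns arrays ready to feed into a CNN.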

This code snippet demonstrates how to load the MNIST dataset, select a subset for training and testing, reshape the images, and normalize the pixel values to be between 0 and 1, a common preprocessing step in image recognition tasks.

Each MNIST image is originally a 28x28 grid of grayscale pixels. Reshaping each image to (28, 28, 1) adds an explicit channel dimension of size 1, so the full array has the shape (number of images, 28, 28, 1). Convolutional layers in most deep learning frameworks expect this channel dimension even for grayscale input, so the reshape allows the CNN to process the images correctly.
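The effect of the reshape is easiest to see on array shapes alone. The short sketch below uses a zero-filled stand-in batch of five images (an assumption, so it runs without downloading MNIST) and shows that `reshape` and `np.expand_dims` give the same result.

```python
import numpy as np

# Stand-in for a batch of five raw MNIST images (no channel dimension yet).
batch = np.zeros((5, 28, 28), dtype=np.uint8)

# Add a trailing channel axis of size 1; -1 lets NumPy infer the batch size.
with_channel = batch.reshape(-1, 28, 28, 1)

# np.expand_dims is an equivalent way to add the same axis.
also_with_channel = np.expand_dims(batch, axis=-1)

print(batch.shape)         # (5, 28, 28)
print(with_channel.shape)  # (5, 28, 28, 1)
```

No pixel values change; only the way the array is indexed does.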

Normalization, which scales the pixel values from their original 0 to 255 range to between 0 and 1, is also important. Neural networks generally train more efficiently and converge faster when input values are within a small, consistent range. Normalization helps prevent issues with large gradients and makes the training process more stable and effective.
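As a small illustration of the scaling step, the sketch below normalizes three sample pixel values. The sample values are arbitrary; casting to `float32` before dividing is a common choice that keeps the array compact, since dividing an integer array directly would produce `float64` in NumPy.

```python
import numpy as np

# Three sample pixel intensities in the raw 0-255 range.
raw = np.array([0, 128, 255], dtype=np.uint8)

# Cast first, then divide, so the result stays float32.
scaled = raw.astype("float32") / 255.0

print(scaled.dtype)                # float32
print(scaled.min(), scaled.max())  # 0.0 1.0
```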

The to_categorical function is used to convert integer class labels into one-hot encoded vectors. In the context of the MNIST dataset, each label is originally an integer from 0 to 9, representing the digit in the image. One-hot encoding transforms these integer labels into binary vectors, where each vector has the same length as the number of classes (in this case, 10). In a one-hot encoded vector, all elements are 0 except for the index corresponding to the class label, which is set to 1. For example, the label 3 becomes [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]. This format is commonly used in machine learning because it allows the model to output probabilities for each class and makes it easier to compute loss and accuracy during training.
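To show what one-hot encoding does under the hood, here is a NumPy sketch that mirrors the behavior described above. The `one_hot` helper is a hypothetical stand-in written for illustration; it is not the Keras implementation of to_categorical.

```python
import numpy as np


def one_hot(labels, num_classes=10):
    """Turn integer labels into rows with a single 1 at the label's index."""
    labels = np.asarray(labels, dtype=np.int64)
    encoded = np.zeros((labels.size, num_classes), dtype=np.float32)
    # For row i, set column labels[i] to 1.
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded


encoded = one_hot([3, 0, 9])
print(encoded[0])  # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]

# argmax recovers the original integer labels from the one-hot rows.
decoded = np.argmax(encoded, axis=1)
print(decoded)     # [3 0 9]
```

The `argmax` step is also how a predicted probability vector is turned back into a digit after the model has been trained.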

After loading the data, it’s important to explore its structure and contents. You can print out the shapes of the data arrays, check the unique labels, and examine the range of pixel values:
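A minimal sketch of such an inspection, assuming the images are already reshaped and normalized and the labels are one-hot encoded. The `summarize` helper is hypothetical, and the demo arrays are random stand-in data so the code runs without downloading MNIST; with the real dataset you would pass your training arrays instead.

```python
import numpy as np


def summarize(images, onehot_labels):
    """Collect basic facts about a preprocessed image set."""
    labels = np.argmax(onehot_labels, axis=1)  # undo the one-hot encoding
    return {
        "images_shape": images.shape,
        "labels_shape": onehot_labels.shape,
        "unique_labels": np.unique(labels).tolist(),
        "pixel_min": float(images.min()),
        "pixel_max": float(images.max()),
    }


# Random stand-in data (not MNIST): 20 images, two per digit class.
rng = np.random.default_rng(0)
demo_images = rng.random((20, 28, 28, 1), dtype=np.float32)
demo_labels = np.eye(10, dtype=np.float32)[np.arange(20) % 10]

for name, value in summarize(demo_images, demo_labels).items():
    print(f"{name}: {value}")
```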

Example Output:

This output provides insights into the structure of the dataset, including the number of samples, the shape of the images, the unique labels present, and the normalization of pixel values.

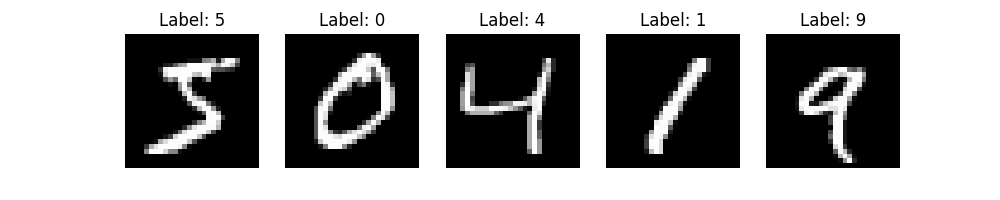

Visualizing some of the images helps you understand what the data looks like and what your model will be learning from. Here’s a simple snippet to display a few sample images from the training set:
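One possible version of such a snippet, assuming Matplotlib is installed, images shaped (N, 28, 28, 1), and one-hot encoded labels. The helper names `grid_dims` and `show_samples` and the default of 10 images in 5 columns are assumptions made for illustration.

```python
import math

import numpy as np


def grid_dims(n_images, n_cols=5):
    """Return (rows, cols) for a grid large enough to hold n_images (at least 1)."""
    n_cols = min(n_cols, n_images)
    return math.ceil(n_images / n_cols), n_cols


def show_samples(images, onehot_labels, n_images=10, n_cols=5):
    """Display the first n_images with their digit labels as titles."""
    # Imported here so grid_dims can be used without Matplotlib installed.
    import matplotlib.pyplot as plt

    n_images = min(n_images, len(images))
    rows, cols = grid_dims(n_images, n_cols)
    fig, axes = plt.subplots(rows, cols, figsize=(1.5 * cols, 1.7 * rows),
                             squeeze=False)
    for ax in axes.ravel():
        ax.axis("off")  # hide ticks, including on unused grid cells
    for i, ax in zip(range(n_images), axes.ravel()):
        # squeeze() drops the channel axis so imshow receives a 28x28 array.
        ax.imshow(images[i].squeeze(), cmap="gray")
        ax.set_title(str(int(np.argmax(onehot_labels[i]))))
    plt.tight_layout()
    plt.show()
```

With the training arrays in memory, a call such as `show_samples(x_train, y_train)` would draw the grid; `x_train` and `y_train` here stand for whatever names your preprocessed arrays have.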

As a result, you'll see a grid of images with their corresponding labels, giving you a visual representation of the dataset.

Understanding the problem of drawing recognition is essential because it forms the foundation for many real-world applications. By learning how to recognize and classify drawings, you can contribute to advancements in technology that make our lives easier and more efficient. CNNs are particularly well-suited for this task due to their ability to automatically learn and extract features from images, making them a powerful tool in the field of computer vision.

Are you ready to dive deeper into the world of drawing recognition? Let's move on to the practice section and start exploring the MNIST dataset in more detail.