Even with a structured process, subtle judgment errors can creep in during candidate evaluation. The real challenge is noticing when your decision is being swayed by factors that aren’t relevant to the job, or by giving some pieces of evidence more weight than they deserve. By focusing on clear criteria and what you actually observe, rather than first impressions or outside opinions, you help ensure every candidate is assessed on their true fit for the role.

In this lesson, you’ll learn how to:

- Spot common evaluation pitfalls that can undermine fair decision-making.

- Use practical techniques to stay fair and consistent, even when signals are mixed.

- Handle ambiguous situations by sticking to evidence and the rubric.

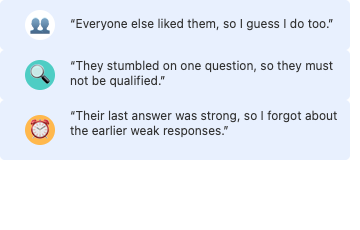

Staying fair starts with knowing where your judgment can go off track. Here are some classic traps to watch for:

- Groupthink: Going along with the majority instead of your own notes.

- Overvaluing a Single Data Point: Letting one moment outweigh the rest.

- Recency Bias: Letting the last answer overshadow earlier evidence.

Recognizing these patterns in yourself is the first step to making better, more consistent decisions.

The best way to avoid these pitfalls is to follow a disciplined evaluation process—stick to the rubric and base your assessment on what you actually observed. Instead of “I just liked their attitude,” try:

“Described mediating a team conflict by facilitating a structured discussion, which matches our collaboration criterion.”

To stay consistent and minimize bias, make it a habit to write your evaluation independently before reading others’ feedback, review the full range of responses (not just the most memorable or recent), and always refer back to your notes and the rubric. If you’re unsure, check your notes or ask clarifying questions — don’t guess. The goal is to ground your evaluation in specific, observable evidence every time.

Here’s a real-world example of avoiding groupthink in a candidate debrief:

- Ryan: What did you think of the candidate? It seemed like most people were really positive.

- Natalie: I noticed that too, but I want to stick to my notes. For example, when I asked about a time they handled a production outage, they didn’t really explain their process. Just said they “worked hard to fix it.”

- Ryan: That’s a good point. Did you see any evidence that matched our problem-solving rubric?

- Natalie: Not really. I was hoping for a step-by-step breakdown, but it wasn’t there. I think it’s important we highlight that, even if the group felt positive overall.

- Ryan: Agreed. Let’s make sure we capture that in the evaluation.

Natalie resists groupthink by focusing on independent, evidence-based notes. Ryan reinforces the importance of sticking to the rubric and concrete examples.

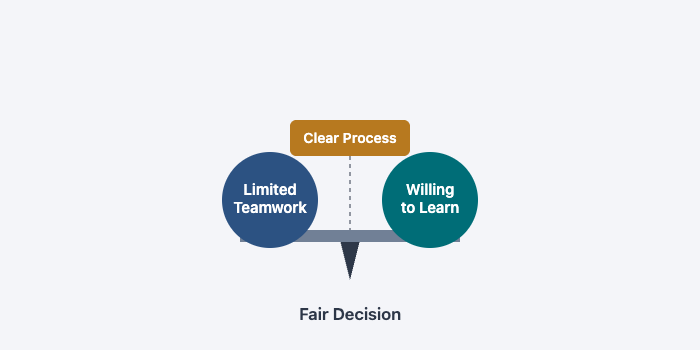

Ambiguous or conflicting signals are common, but fairness comes from sticking to what you actually observed. When you’re faced with uncertainty, try using evidence triangulation: look for multiple examples or patterns across the interview, rather than letting a single moment sway your decision.

Here’s how to apply evidence triangulation:

- Gather multiple data points: Look for several examples or behaviors related to the same skill or criterion throughout the interview.

- Compare and contrast: Note both strengths and gaps. For example, “Candidate hesitated before answering, but ultimately described a clear process for handling outages.”

- Look for patterns: Ask yourself if there’s a consistent trend (e.g., repeated willingness to learn, or recurring gaps in technical depth) rather than relying on a single strong or weak moment.

- Balance your judgment: Weigh the overall evidence, not just the most recent or memorable response.

- Acknowledge uncertainty: If signals are mixed, it’s okay to note this in your evaluation—as long as you apply the same standards and rubric anchors for every candidate.

By gathering and weighing several pieces of evidence, you get a fuller, more accurate picture of the candidate’s abilities. This approach helps ensure your decisions are fair, consistent, and based on the overall pattern of evidence rather than isolated moments.

You’ve seen how to spot evaluation pitfalls, stay anchored in what you observe, and handle ambiguity fairly. In the next practice, you’ll get hands-on experience navigating real-world scenarios and reinforcing your ability to avoid common evaluation traps. These are the skills that will help you become a trusted, reliable interviewer.