Evaluating sales candidates can be challenging, especially when multiple interviewers are involved or when responses are open to interpretation. Without a structured approach, it’s easy for bias or gut instinct to creep in, leading to inconsistent or unfair decisions. Structured evaluation frameworks help you focus on job-related evidence, making your recommendations easier to justify and your hiring process more defensible. By using clear, agreed-upon criteria, you ensure that every candidate is assessed on the same standards, regardless of who is conducting the interview.

A Behaviorally Anchored Rating Scale (BARS) is a tool that defines what high, medium, and low performance look like for each competency, using real examples of on-the-job behavior. Instead of relying on subjective impressions, you compare a candidate’s response to these concrete benchmarks. For example, when evaluating “resilience,” a high rating might be anchored to a story like “After losing a big client, I doubled my outreach and closed two new accounts within a month,” while a low rating might be anchored to “If a client objects, I just move on to the next one.” BARS helps you stay objective, reduce bias, and ensure that every interviewer is using the same standards. Anchoring your ratings to the actual requirements of the role, such as how a mid-level Account Executive handles client objections, keeps your evaluation relevant and fair.

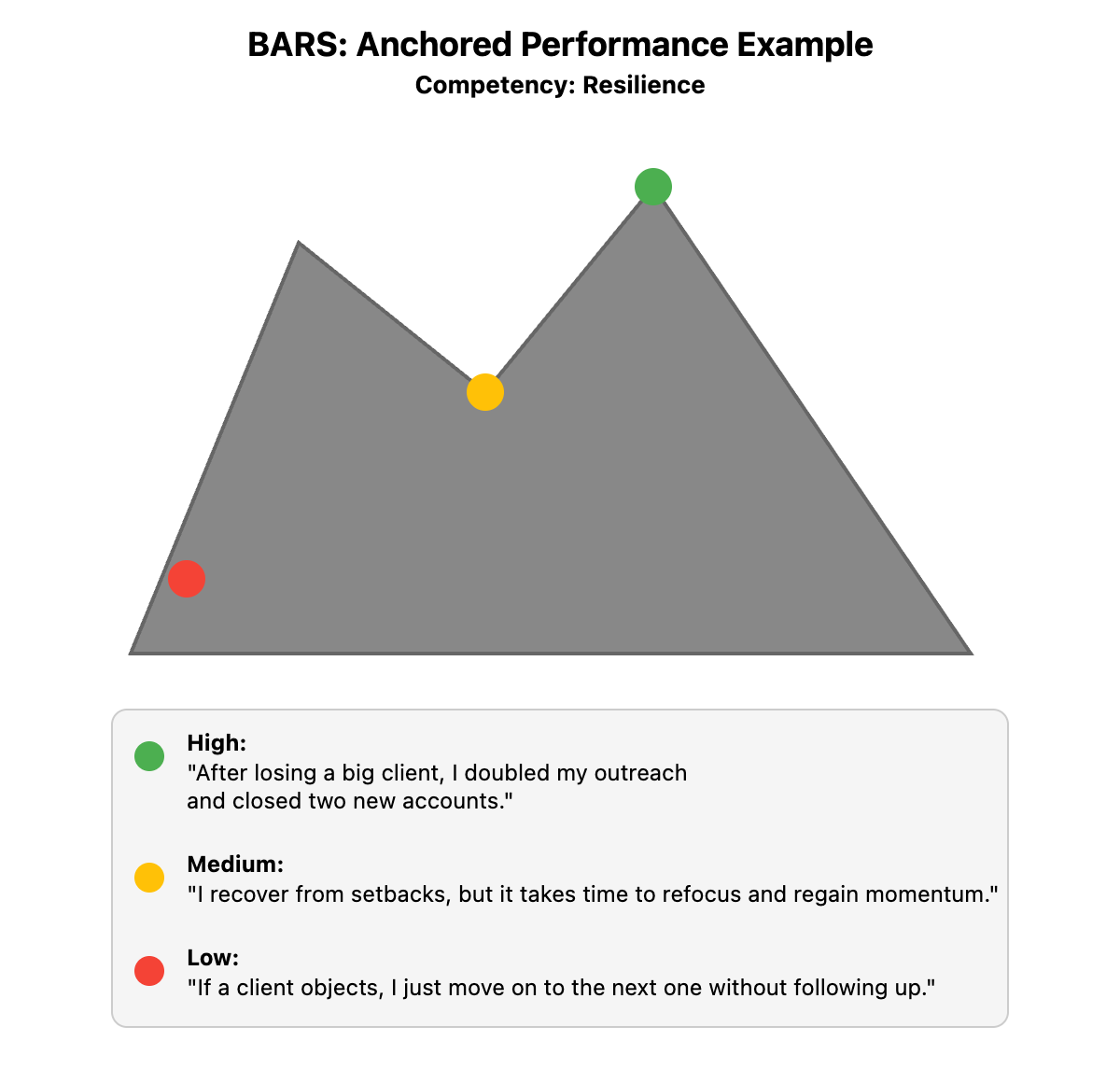

The image above represents the BARS framework using a mountain metaphor. Think of each level of the mountain as a level of performance: the base represents low competency, the middle shows average performance, and the peak illustrates high competency. Each level is anchored by specific, real-world behaviors that make it clear what it takes to move up the mountain. This visual can help interviewers quickly grasp how to rate responses, and it reinforces the idea that reaching the top requires concrete, demonstrated skills.

Consistency across interviewers is crucial for fair hiring. Shared behavioral anchors (agreed-upon descriptions of what “good” and “bad” look like) ensure everyone rates candidates against the same standard, even if their personal styles differ. For example, if your team agrees that a “5” for ownership means the candidate proactively solved a problem without being asked, then every interviewer applies that same definition. When in doubt, refer back to the shared anchors and ask, “Did the candidate’s example match the behaviors we agreed define success in this role?”

Here’s how this looks in practice:

- Chris: I noticed you rated the candidate’s objection-handling as a 5, but I gave it a 3. Can we talk through our reasoning?

- Natalie: Sure! I thought her story was strong, but when I checked our anchor for a 5, it says the candidate should have turned a “no” into a “yes” with a creative solution. She described listening to the client, but didn’t actually win them back.

- Chris: That matches my read. I liked her approach, but she didn’t actually close the deal. The anchor for a 3 is “shows effort but lacks a clear result,” which fits better.

- Natalie: Exactly. Using the anchors helps us stay consistent, even if we have different first impressions.

In this exchange, both interviewers use the shared behavioral anchors to calibrate their ratings, ensuring their evaluation is based on agreed-upon standards rather than personal preference. This habit makes your evaluations more reliable and easier to explain, and it’s a key part of building a fair, effective interview process.

Once you’re comfortable with these structured evaluation techniques, you’ll be ready to practice them in a realistic scenario. In the upcoming role-play, you’ll have the chance to explain the value of shared behavioral anchors to a new interviewer and see how this approach leads to more consistent and fair hiring decisions.