Welcome back to lesson 2 of "Building and Applying Your Neural Network Library"! You've made excellent progress in this course. In our previous lesson, we successfully transformed our neural network code into a well-structured Python package by modularizing our core components — dense layers and activation functions. We created clean import paths and established the foundation for a professional-grade neural network library.

Now we're ready to take the next crucial step: modularizing our training components. As you may recall from our previous courses, training a neural network involves two key components beyond the layers themselves: loss functions (which measure how well our network is performing) and optimizers (which update the network's weights based on the gradients we compute). Currently, these components are scattered throughout our training scripts, making them difficult to reuse and maintain.

In this lesson, we'll organize these training components into dedicated modules within our neuralnets package. We'll create a losses submodule to house our Mean Squared Error (MSE) loss function and an optimizers submodule for our Stochastic Gradient Descent (SGD) optimizer. By the end of this lesson, you'll have a complete, modular training pipeline that demonstrates the power of good software architecture in machine learning projects.

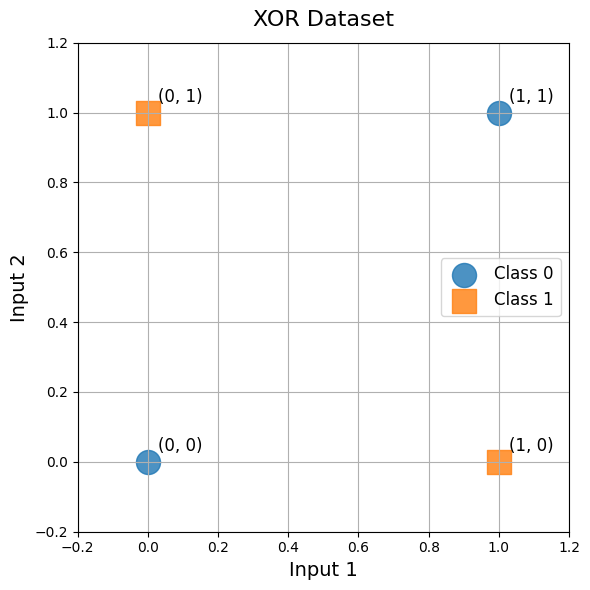

Before we dive into modularizing our training components, let's take a moment to understand the dataset we'll be using to test our library: the XOR (exclusive OR) problem. This is a classic toy problem in machine learning that serves as an excellent test case for neural networks because it is not linearly separable: no single linear decision boundary can separate the two classes, but a small multi-layer neural network can.

The XOR problem consists of four data points with two binary inputs and one binary output. The output is 1 when exactly one of the inputs is 1, and 0 otherwise. This creates the pattern: [0,0] → 0, [0,1] → 1, [1,0] → 1, [1,1] → 0. XOR is typically treated as a classification problem, but it can also be framed as a regression task, which is what we'll do by using our MSE loss. Despite its simplicity, XOR is a useful end-to-end check: if our network can learn it, we have good evidence that our forward pass, backward pass, loss calculation, and optimization code are all working together correctly.
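To make the pattern concrete, here is one way the four XOR points could be written as NumPy arrays. The variable names `X` and `y` and the column-vector shape of the targets are assumptions chosen for illustration, not something fixed by the library.

```python
import numpy as np

# The four XOR input pairs, one per row.
X = np.array([[0, 0],
              [0, 1],
              [1, 0],
              [1, 1]], dtype=float)

# Targets as a column vector so they line up with a one-unit output layer.
y = np.array([[0], [1], [1], [0]], dtype=float)

# Sanity check: each target equals the exclusive OR of its two inputs.
expected = np.logical_xor(X[:, 0], X[:, 1]).astype(float).reshape(-1, 1)
assert np.array_equal(y, expected)
```

Storing the targets as floats keeps them compatible with a regression-style loss such as MSE.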

While we're using XOR for rapid development and testing in this lesson, later in the course we'll apply our complete neural network library to a real-world dataset — the California Housing dataset — where we'll predict house prices based on various features like location, population, and median income.

Let's start by organizing our loss functions into a dedicated module. We'll create a losses submodule within our neuralnets package, with the familiar MSE functions organized into clean, importable modules. The key insight here is separating the mathematical operations from the training logic, creating a clean interface that makes our code more testable and allows us to easily add other loss functions in the future.

neuralnets/losses/__init__.py:

neuralnets/losses/mse.py:
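As a minimal sketch of what such a module might contain, the block below defines the loss and its gradient as two plain functions. The names `mse` and `mse_prime` and the `(y_true, y_pred)` argument order are assumptions for illustration; keep whatever names your earlier training scripts used.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean of the squared differences over all elements."""
    return np.mean((y_true - y_pred) ** 2)


def mse_prime(y_true, y_pred):
    """Gradient of mse with respect to y_pred.

    Dividing by y_true.size matches the averaging done by np.mean above.
    """
    return 2.0 * (y_pred - y_true) / y_true.size


# Small worked example: two targets, both predicted as 0.5.
y_true = np.array([[0.0], [1.0]])
y_pred = np.array([[0.5], [0.5]])

loss = mse(y_true, y_pred)        # 0.25
grad = mse_prime(y_true, y_pred)  # [[0.5], [-0.5]]
```

Under the same naming assumption, the package's `__init__.py` would typically re-export these functions (for example with `from .mse import mse, mse_prime`) so that callers can import them directly from the losses submodule.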

Similarly, let's organize our optimization algorithm into its own module. We'll implement our SGD optimizer as a class that encapsulates both the learning rate parameter and the update logic.

The key benefit of this modular approach is that the optimization logic lives in one reusable class with a clean interface. The update method applies the SGD update rule to any layer that has trainable parameters, so the same optimizer instance can be used for every layer in the network.

neuralnets/optimizers/__init__.py:

neuralnets/optimizers/sgd.py:
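A minimal sketch of such an optimizer class is shown below. The attribute names `weights`, `biases`, `d_weights`, and `d_biases` are assumptions about how a layer stores its parameters and gradients; adapt them to match your own layer classes. The `SimpleNamespace` object is only a stand-in layer so the example runs on its own.

```python
from types import SimpleNamespace

import numpy as np


class SGD:
    """Plain stochastic gradient descent: param -= learning_rate * gradient."""

    def __init__(self, learning_rate=0.01):
        self.learning_rate = learning_rate

    def update(self, layer):
        # Layers without trainable parameters (e.g. pure activations) are skipped.
        if not hasattr(layer, "weights"):
            return
        layer.weights -= self.learning_rate * layer.d_weights
        layer.biases -= self.learning_rate * layer.d_biases


# Stand-in layer with hypothetical parameter and gradient attributes.
layer = SimpleNamespace(
    weights=np.array([[1.0, 2.0]]),
    biases=np.array([[0.5]]),
    d_weights=np.array([[0.1, -0.2]]),
    d_biases=np.array([[1.0]]),
)

optimizer = SGD(learning_rate=0.1)
optimizer.update(layer)
# weights are now approximately [[0.99, 2.02]] and biases approximately [[0.4]]
```

Checking for the `weights` attribute is one simple way to let the optimizer be called on every layer in a network without special-casing parameter-free layers.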

Now comes the exciting part — putting all our modular components together into a complete training pipeline! This demonstrates the power of our modular design: clean imports, specific component usage, and a readable training setup that clearly separates concerns.

Our training pipeline combines the MLP class from our previous lesson with our newly modularized loss functions and optimizers. Notice how each component has a specific responsibility: the network handles forward and backward propagation, the loss module computes objectives and gradients, and the optimizer updates weights.

neuralnets/main.py:
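The sketch below shows the overall shape such a training script might take. So that it runs on its own, the layer, loss, and optimizer are defined inline as simplified stand-ins; in the real package they would be imported from the neuralnets submodules instead. The class and function names, the 2-4-1 architecture, the sigmoid activations, the learning rate, and the seed are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so runs are repeatable


class Dense:
    """Stand-in fully connected layer with a built-in sigmoid activation."""

    def __init__(self, n_in, n_out):
        self.weights = rng.normal(0.0, 1.0, size=(n_in, n_out))
        self.biases = np.zeros((1, n_out))

    def forward(self, x):
        self.x = x
        self.out = 1.0 / (1.0 + np.exp(-(x @ self.weights + self.biases)))
        return self.out

    def backward(self, grad_out):
        grad_z = grad_out * self.out * (1.0 - self.out)  # sigmoid derivative
        self.d_weights = self.x.T @ grad_z
        self.d_biases = grad_z.sum(axis=0, keepdims=True)
        return grad_z @ self.weights.T  # gradient for the previous layer


def mse(y_true, y_pred):
    return np.mean((y_true - y_pred) ** 2)


def mse_prime(y_true, y_pred):
    return 2.0 * (y_pred - y_true) / y_true.size


class SGD:
    def __init__(self, learning_rate=0.01):
        self.learning_rate = learning_rate

    def update(self, layer):
        layer.weights -= self.learning_rate * layer.d_weights
        layer.biases -= self.learning_rate * layer.d_biases


def forward(layers, x):
    for layer in layers:
        x = layer.forward(x)
    return x


# XOR data: four samples, two inputs, one target each.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

layers = [Dense(2, 4), Dense(4, 1)]
optimizer = SGD(learning_rate=0.5)

losses = []
for epoch in range(1000):
    y_pred = forward(layers, X)          # network: forward pass
    losses.append(mse(y, y_pred))        # loss module: objective
    grad = mse_prime(y, y_pred)          # loss module: gradient
    for layer in reversed(layers):       # network: backward pass
        grad = layer.backward(grad)
    for layer in layers:                 # optimizer: weight updates
        optimizer.update(layer)

predictions = forward(layers, X)
print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
print("rounded predictions:", np.round(predictions).ravel())
```

How quickly the loss falls, and whether the rounded predictions match XOR after 1000 epochs, depends on the initialization and learning rate, so the numbers this sketch prints will not necessarily match the lesson's own run.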

When we run our complete training pipeline, we can observe how our modular neural network library learns to solve the XOR problem. The training output demonstrates both the learning process and the final performance of our network:

The output shows excellent convergence — our loss decreases steadily from 0.25 to just 0.0014 over 1000 epochs. More importantly, the final predictions demonstrate that our network has successfully learned the XOR logic: it outputs values close to 0 for inputs [0,0] and [1,1], and values close to 1 for inputs [0,1] and [1,0]. When rounded, these predictions perfectly match the expected XOR outputs, confirming that our modular library components work together seamlessly.

Excellent work! We've successfully completed the next major step in building our neural network library by modularizing our training components. We've organized our loss functions and optimizers into dedicated modules, creating a clean separation of concerns that makes our code more maintainable, testable, and extensible. The successful training run on the XOR problem confirms that the network, loss, and optimizer modules interoperate correctly, setting us up for the next phase of development.

Looking ahead, we have two exciting milestones remaining in our library-building journey. In our next lesson, we'll create a high-level Model orchestrator class that will provide an even cleaner interface for defining, training, and evaluating neural networks. After that, we'll put our completed library to the test on the California Housing dataset, demonstrating its capabilities on real-world regression problems. But first, it's time to get hands-on! The upcoming practice section will give you the opportunity to extend and experiment with the modular components we've built, reinforcing your understanding through practical application.