Welcome to lesson 2 of "Building and Applying Your Neural Network Library"! In the previous lesson, you took your first steps toward a professional-grade neural network library by modularizing your core components — dense layers and activation functions — using a modern JavaScript project structure and ES modules. You learned how to organize your codebase for maintainability and extensibility, setting the stage for a robust machine learning framework.

Now, it's time to take the next big step: modularizing your training components. In neural network training, two essential elements beyond the layers themselves are loss functions (which measure how well the network is performing) and optimizers (which update the network's weights based on computed gradients). If these components are scattered throughout your code, it becomes difficult to reuse, test, or extend them.

In this lesson, you'll organize these training components into dedicated modules within your neuralnets project. You'll create a losses submodule to house your Mean Squared Error (MSE) loss function and an optimizers submodule for your Stochastic Gradient Descent (SGD) optimizer. By the end, you'll have a fully modular training pipeline that demonstrates the power of good software architecture in JavaScript-based machine learning projects.

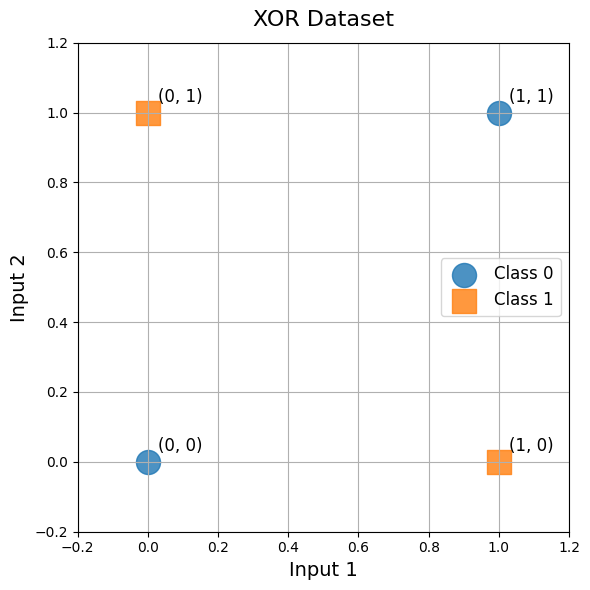

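Before we dive into modularizing our training components, let's look at the dataset we'll use to test our library: the XOR (exclusive OR) problem. This classic machine learning example is ideal for testing neural networks because its two classes are not linearly separable. No single straight line in the input plane can put [0,1] and [1,0] on one side and [0,0] and [1,1] on the other. A simple linear model therefore cannot solve it, and the network needs at least one hidden layer with a nonlinear activation.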

The XOR problem consists of four data points, each with two binary inputs and one binary output. The output is 1 when exactly one of the inputs is 1 and 0 otherwise: [0,0] → 0, [0,1] → 1, [1,0] → 1, [1,1] → 0. Although XOR is usually treated as a classification problem, we'll frame it as a regression task: the network produces one real-valued output per example, and training minimizes the mean squared error (MSE) between that output and the 0/1 target.
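To see concretely why a hidden layer is needed, here is a small sketch in plain JavaScript (no mathjs) that fits the best purely linear model ŷ = w1·x1 + w2·x2 + b to the four XOR points by gradient descent on MSE. Because x1 and x2 are each uncorrelated with the XOR target (and with each other), the least-squares solution is w1 = w2 = 0 and b = 0.5. Every prediction is therefore 0.5, and the loss cannot drop below 0.25. The function name `fitLinear`, the learning rate, and the step count are illustrative choices, not part of the lesson's library.

```javascript
// Sketch: fit the best purely linear model y ≈ w1*x1 + w2*x2 + b to XOR
// with full-batch gradient descent on mean squared error.
// fitLinear, the learning rate and the step count are illustrative choices.
const X = [[0, 0], [0, 1], [1, 0], [1, 1]];
const y = [0, 1, 1, 0];

function fitLinear(X, y, learningRate = 0.1, steps = 5000) {
  let w1 = 0, w2 = 0, b = 0;
  const n = X.length;
  for (let step = 0; step < steps; step++) {
    // Gradients of mean((pred - target)^2) with respect to w1, w2 and b.
    let g1 = 0, g2 = 0, gb = 0;
    for (let i = 0; i < n; i++) {
      const err = w1 * X[i][0] + w2 * X[i][1] + b - y[i];
      g1 += (2 / n) * err * X[i][0];
      g2 += (2 / n) * err * X[i][1];
      gb += (2 / n) * err;
    }
    w1 -= learningRate * g1;
    w2 -= learningRate * g2;
    b -= learningRate * gb;
  }
  return { w1, w2, b };
}

const { w1, w2, b } = fitLinear(X, y);
const preds = X.map(([x1, x2]) => w1 * x1 + w2 * x2 + b);
const loss = preds.reduce((s, p, i) => s + (p - y[i]) ** 2, 0) / y.length;
console.log(preds.map((p) => p.toFixed(3)), loss.toFixed(4));
```

Running it should show every prediction at 0.500 and a loss of 0.2500. That is the best any model without a hidden layer can do on this data, which is why XOR is a useful first test for a multilayer network.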

Here's how you can set up the XOR dataset in JavaScript using mathjs:
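A minimal sketch of that setup uses plain nested arrays, an assumption that lets the snippet run without any dependencies. `X` holds one example per row (4 × 2), and `y` holds the matching targets as a 4 × 1 column. With mathjs installed, passing the same arrays to `math.matrix()` gives the matrix form the lesson refers to. The small `xor` helper is hypothetical and only double-checks the targets.

```javascript
// XOR dataset: each row of X is one example [x1, x2]; y holds the targets
// as a column (4 x 1) so it lines up with a network that has one output unit.
const X = [
  [0, 0],
  [0, 1],
  [1, 0],
  [1, 1],
];
const y = [[0], [1], [1], [0]];

// Sanity check (hypothetical helper): the target is 1 exactly when the inputs differ.
const xor = (a, b) => (a !== b ? 1 : 0);
X.forEach(([a, b], i) => {
  console.log(`[${a}, ${b}] -> ${y[i][0]} (expected ${xor(a, b)})`);
});
```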

We'll use this simple dataset for rapid development and testing. Later in the course, you'll apply your complete neural network library to a real-world dataset, such as the California Housing dataset, to predict house prices based on features like location, population, and median income.

To keep your codebase clean and extensible, it's important to separate your loss functions into their own module. This allows you to easily add new loss functions in the future and keeps your training logic focused and testable.

Let's create a losses subfolder in your neuralnets project and implement the Mean Squared Error (MSE) loss and its derivative in mse.js:

neuralnets/losses/mse.js
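Here is a minimal sketch of what mse.js could contain, under a few assumptions. Predictions and targets are plain 2-D arrays of the same shape rather than mathjs matrices. The functions are named `mse` and `mseDerivative` and take `(yTrue, yPred)`. The derivative is averaged over every element so that it is the exact gradient of the mean. In the real module file each function would be marked with `export`; the keyword is left off here so the block runs as a standalone script. The last lines compare the analytic gradient with a central finite-difference estimate, the kind of isolated check that a dedicated losses module makes easy.

```javascript
// Mean squared error and its gradient for 2-D arrays (rows = samples).
// Assumed names/signatures: mse(yTrue, yPred), mseDerivative(yTrue, yPred).

function mse(yTrue, yPred) {
  const t = yTrue.flat();
  const p = yPred.flat();
  // Average of the squared differences over all elements.
  return t.reduce((sum, ti, i) => sum + (p[i] - ti) ** 2, 0) / t.length;
}

function mseDerivative(yTrue, yPred) {
  const n = yTrue.length * yTrue[0].length;
  // d/dp of mean((p - t)^2) is 2 * (p - t) / n for every element.
  return yPred.map((row, i) => row.map((p, j) => (2 * (p - yTrue[i][j])) / n));
}

// Quick check against a central finite difference on one element.
const yTrue = [[0], [1], [1], [0]];
const yPred = [[0.1], [0.8], [0.6], [0.3]];
const eps = 1e-6;
const plus = yPred.map((row) => [...row]);
const minus = yPred.map((row) => [...row]);
plus[2][0] += eps;
minus[2][0] -= eps;
const numeric = (mse(yTrue, plus) - mse(yTrue, minus)) / (2 * eps);
const analytic = mseDerivative(yTrue, yPred)[2][0];
console.log(mse(yTrue, yPred), analytic, numeric);
```

Dividing by the total number of elements keeps `mseDerivative` the exact gradient of `mse`. Dividing by the number of rows instead gives the same result for a single-output network like the XOR one and only rescales the gradient otherwise.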

Now, create an index.js file in the losses folder to re-export these functions for easy import elsewhere in your project:

neuralnets/losses/index.js

With this setup, you can import your loss functions anywhere in your project like this:

Just like with loss functions, it's best practice to encapsulate your optimization algorithms in their own module. Here, you'll implement a simple Stochastic Gradient Descent (SGD) optimizer as a class, which will handle updating the weights and biases of your layers.

Create a new file for your optimizer:

neuralnets/optimizers/sgd.js
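Here is a minimal sketch of what sgd.js might contain. It assumes each layer exposes its parameters as `weights` (a 2-D array, inputs × outputs) and `biases` (a 1-D array), and stores the gradients from its backward pass as `dWeights` and `dBiases`. Those property names and the method name `updateParams` are hypothetical; match them to whatever your dense layer from the previous lesson actually uses. As in the other standalone sketches, `export` is omitted. The update is the plain SGD rule `param -= learningRate * grad`, applied element by element.

```javascript
// Plain stochastic gradient descent: param <- param - learningRate * gradient.
// Assumes layers expose weights/biases and dWeights/dBiases (hypothetical names).
class SGD {
  constructor(learningRate = 0.01) {
    this.learningRate = learningRate;
  }

  // Update one layer's parameters in place using its stored gradients.
  updateParams(layer) {
    layer.weights.forEach((row, i) => {
      row.forEach((_, j) => {
        row[j] -= this.learningRate * layer.dWeights[i][j];
      });
    });
    layer.biases.forEach((_, j) => {
      layer.biases[j] -= this.learningRate * layer.dBiases[j];
    });
  }
}

// Usage with a hand-built stand-in for a dense layer (2 inputs, 1 output).
const layer = {
  weights: [[0.5], [-0.2]],
  biases: [0.0],
  dWeights: [[0.1], [-0.4]],
  dBiases: [0.3],
};
const optimizer = new SGD(0.1);
optimizer.updateParams(layer);
console.log(layer.weights, layer.biases); // approximately [[0.49], [-0.16]] and [-0.03]
```

This sketch mutates the arrays in place rather than replacing them, so any other references to the layer's parameters stay valid. Keeping the optimizer ignorant of how gradients are computed also means a different update rule could later be swapped in behind the same `updateParams` call.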

And create an index.js file in the optimizers folder to re-export your optimizer:

neuralnets/optimizers/index.js

Now, you can import your optimizer class anywhere in your project:

Now comes the exciting part: putting all our modular components together into a complete training pipeline! This shows the payoff of the modular design: clean imports, each component used for exactly one job, and a readable training setup that keeps concerns clearly separated.

Our training pipeline combines the MLP class from the previous lesson with the newly modularized loss functions and optimizers. Notice that each component has a single responsibility: the network handles forward and backward propagation, the loss module computes the loss value and its gradient with respect to the network's predictions, and the optimizer uses the gradients from backpropagation to update each layer's weights and biases.

neuralnets/main.js
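A self-contained sketch of such a training script follows, under explicit assumptions. Because the previous lesson's MLP class is not part of this section, a hypothetical `DenseSigmoid` layer (a dense layer with a built-in sigmoid) and a tiny `MLP` container stand in for it. Plain arrays replace mathjs. `mse`, `mseDerivative`, and `SGD` are small illustrative versions of the loss and optimizer modules described in this lesson. The point is the division of labor inside the loop: forward pass, loss value, backpropagation from the loss gradient, then a parameter update.

```javascript
// Self-contained sketch of an XOR training loop with separate network, loss and
// optimizer pieces. All classes/functions here are hypothetical stand-ins.

// Tiny deterministic random generator (an LCG) so runs are repeatable.
function makeRng(seed) {
  let state = seed;
  return () => (state = (state * 1664525 + 1013904223) % 4294967296) / 4294967296;
}

// Small helpers for plain 2-D arrays (rows = samples).
const matmul = (A, B) =>
  A.map((row) => B[0].map((_, j) => row.reduce((s, a, k) => s + a * B[k][j], 0)));
const transpose = (A) => A[0].map((_, j) => A.map((row) => row[j]));
const sigmoid = (z) => 1 / (1 + Math.exp(-z));

// Dense layer followed by a sigmoid activation.
class DenseSigmoid {
  constructor(nIn, nOut, rand) {
    // Weights drawn uniformly from [-1, 1); biases start at zero.
    this.weights = Array.from({ length: nIn }, () =>
      Array.from({ length: nOut }, () => rand() * 2 - 1));
    this.biases = new Array(nOut).fill(0);
  }
  forward(X) {
    this.input = X;
    this.output = matmul(X, this.weights).map((row) =>
      row.map((z, j) => sigmoid(z + this.biases[j])));
    return this.output;
  }
  backward(dOut) {
    // Chain rule through the sigmoid: dZ = dOut * a * (1 - a).
    const dZ = dOut.map((row, i) =>
      row.map((g, j) => g * this.output[i][j] * (1 - this.output[i][j])));
    this.dWeights = matmul(transpose(this.input), dZ);
    this.dBiases = dZ[0].map((_, j) => dZ.reduce((s, row) => s + row[j], 0));
    return matmul(dZ, transpose(this.weights)); // gradient for the previous layer
  }
}

// The network only runs the forward and backward passes.
class MLP {
  constructor(layers) { this.layers = layers; }
  forward(X) { return this.layers.reduce((out, layer) => layer.forward(out), X); }
  backward(grad) {
    for (const layer of [...this.layers].reverse()) grad = layer.backward(grad);
  }
}

// The loss supplies the value and the gradient with respect to the predictions.
function mse(yTrue, yPred) {
  const t = yTrue.flat();
  const p = yPred.flat();
  return t.reduce((s, ti, i) => s + (p[i] - ti) ** 2, 0) / t.length;
}
function mseDerivative(yTrue, yPred) {
  const n = yTrue.length * yTrue[0].length;
  return yPred.map((row, i) => row.map((p, j) => (2 * (p - yTrue[i][j])) / n));
}

// The optimizer only updates parameters from the stored gradients.
class SGD {
  constructor(learningRate) { this.learningRate = learningRate; }
  updateParams(layer) {
    layer.weights.forEach((row, i) =>
      row.forEach((_, j) => { row[j] -= this.learningRate * layer.dWeights[i][j]; }));
    layer.biases.forEach((_, j) => { layer.biases[j] -= this.learningRate * layer.dBiases[j]; });
  }
}

// Wire everything together on XOR.
const X = [[0, 0], [0, 1], [1, 0], [1, 1]];
const y = [[0], [1], [1], [0]];
const rand = makeRng(42);
const model = new MLP([new DenseSigmoid(2, 4, rand), new DenseSigmoid(4, 1, rand)]);
const optimizer = new SGD(1.0);

const initialLoss = mse(y, model.forward(X));
for (let epoch = 0; epoch < 10000; epoch++) {
  const yPred = model.forward(X);                                 // forward pass
  const loss = mse(y, yPred);                                     // loss value
  model.backward(mseDerivative(y, yPred));                        // backpropagation
  model.layers.forEach((layer) => optimizer.updateParams(layer)); // parameter update
  if (epoch % 2000 === 0) console.log(`epoch ${epoch}: loss ${loss.toFixed(4)}`);
}
const finalPreds = model.forward(X);
const finalLoss = mse(y, finalPreds);
console.log(`final loss ${finalLoss.toFixed(4)}`, finalPreds.map((r) => r[0].toFixed(3)));
```

Because all four examples go into every update, this loop is effectively full-batch gradient descent; the same optimizer class would work unchanged on mini-batches. How quickly the loss approaches zero, and whether it gets there within 10,000 epochs, depends on the random initialization and the learning rate.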

When you run this script, you should see output similar to:

Great job! You've now modularized your training components by organizing loss functions and optimizers into dedicated JavaScript modules. This clean separation of concerns makes your codebase more maintainable, testable, and extensible. Training your network on the XOR problem validates that your modular components work together seamlessly.

In the next lesson, you'll take your library to the next level by building a high-level Model orchestrator class, providing an even cleaner interface for defining, training, and evaluating neural networks. After that, you'll put your completed library to the test on a real-world dataset. For now, take some time to experiment with your modular components and reinforce your understanding through hands-on practice. Happy coding!