Welcome to this lesson on editing messages in LLM conversations. In previous lessons, you learned how LLMs generate text, how different model versions work, and how context windows shape responses. You also saw how guiding the model step by step can improve reasoning.

Now, let's focus on a practical technique: editing previous messages to refine the model's context and improve results. This skill is handy when the model's response isn't quite what you wanted or when you want to guide the conversation in a more specific direction. By the end of this lesson, you'll know how to recognize when an edit is needed and how to make changes that help the LLM give better answers.

As a quick reminder, LLMs do not just look at your latest message — they process the entire conversation history every time you submit a new message. This means that any changes you make to earlier messages or responses will affect how the model interprets the conversation and what it generates next.

For example, if you ask a question and then edit your question or the model's answer, the LLM will use the updated version as its context for future replies. This is a powerful way to steer the conversation and get more relevant or better-formatted answers.
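To make this concrete, here is a minimal sketch of a conversation stored as a list of messages. The `role`/`content` message shape, the helper names `edit_message` and `build_context`, and the placeholder texts are all assumptions made for illustration; real chat tools may store and send the history differently.

```python
from typing import Dict, List

Message = Dict[str, str]  # hypothetical shape: {"role": ..., "content": ...}


def edit_message(history: List[Message], index: int, new_content: str) -> List[Message]:
    """Return a copy of the history with one message's content replaced."""
    edited = [dict(message) for message in history]  # leave the original untouched
    edited[index]["content"] = new_content
    return edited


def build_context(history: List[Message]) -> str:
    """Flatten the whole history into the text a model would read on the next turn."""
    return "\n".join(f"{m['role']}: {m['content']}" for m in history)


history = [
    {"role": "user", "content": "PLACEHOLDER QUESTION"},
    {"role": "assistant", "content": "PLACEHOLDER LONG ANSWER"},
]

# Edit the assistant's answer, then continue the dialogue with a new user turn.
edited = edit_message(history, 1, "PLACEHOLDER SHORT ANSWER")
edited.append({"role": "user", "content": "PLACEHOLDER FOLLOW-UP"})

print(build_context(edited))
```

In this sketch, the context built for the next turn contains only the edited answer; the original wording is no longer part of what the model would read.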

Let's consider an example. Imagine you are a teacher wanting to brainstorm a list of teaching strategies. Consider the following prompt:

By specifying that you want a "list" and an "overview," you set clear expectations for the LLM's response format. However, the prompt is still somewhat general, so the LLM may include a variety of strategy types and additional details. Let's consider a sample output of an LLM:

A part of the output is omitted for clarity. Notice that the output includes multiple types of strategies and implementation details, which may go beyond what you wanted if you were only interested in "active learning strategies" and a simpler format. This is not an error by the LLM, but rather a result of the prompt's generality.

One approach is to add a set of specific instructions that define every detail of the output format in your prompt. However, this can be very time-consuming. Let's take a look at a more efficient approach.

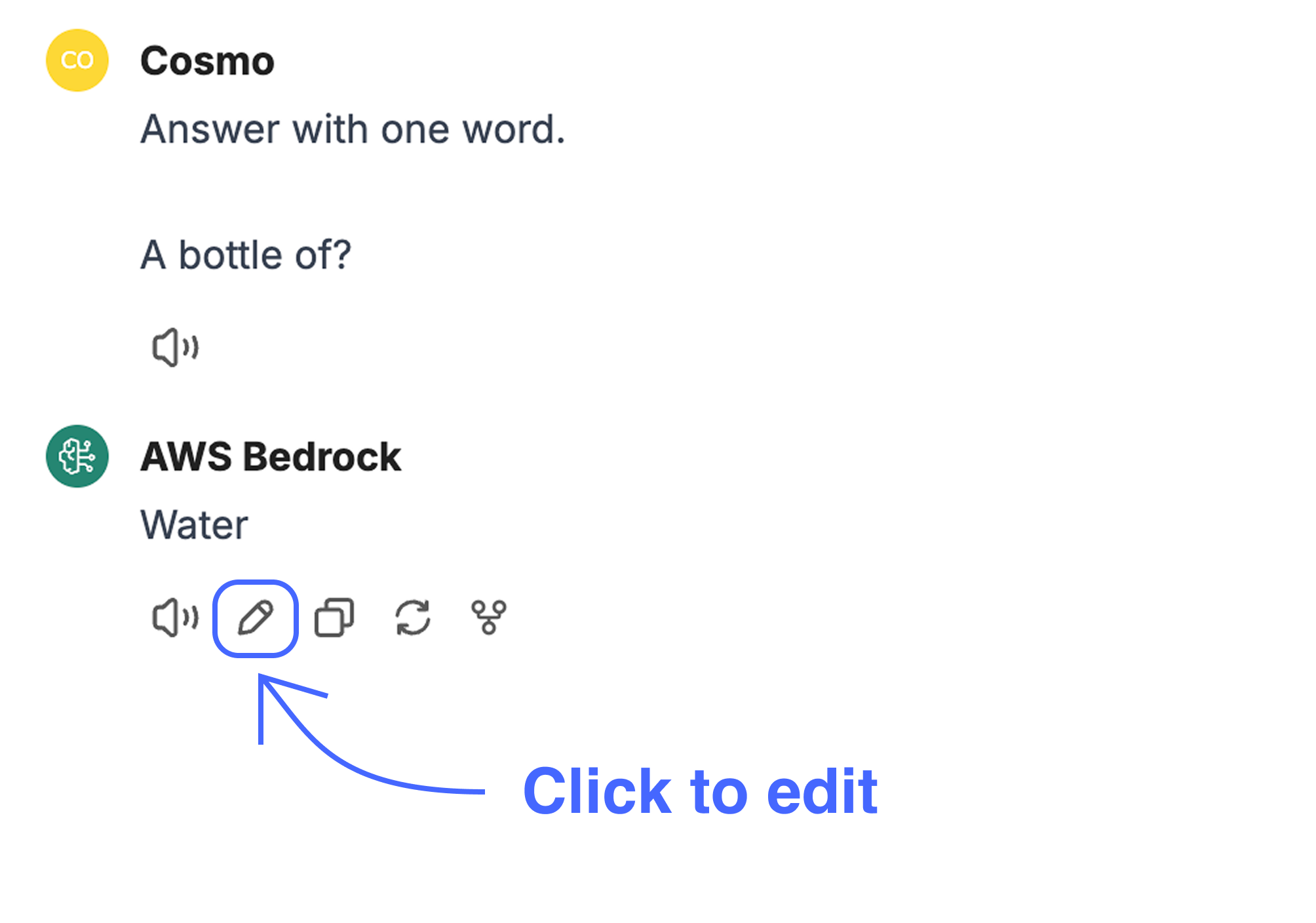

Let's consider this dialogue. You can modify the LLM's answer using the edit button.

A modern chat-based LLM works as follows: it takes the whole previous dialogue and predicts the next reply. This means that even slight modifications to previous responses can significantly affect subsequent outputs. If an LLM's response includes unnecessary details, restructuring the answer before continuing the dialogue makes future responses more likely to follow the desired format. However, one potential limitation is that an edit only changes the text the model reads on later turns: any unedited messages that still reflect the original answer remain in the context and can pull future responses back toward it. If inconsistencies persist, starting a new conversation might be necessary.

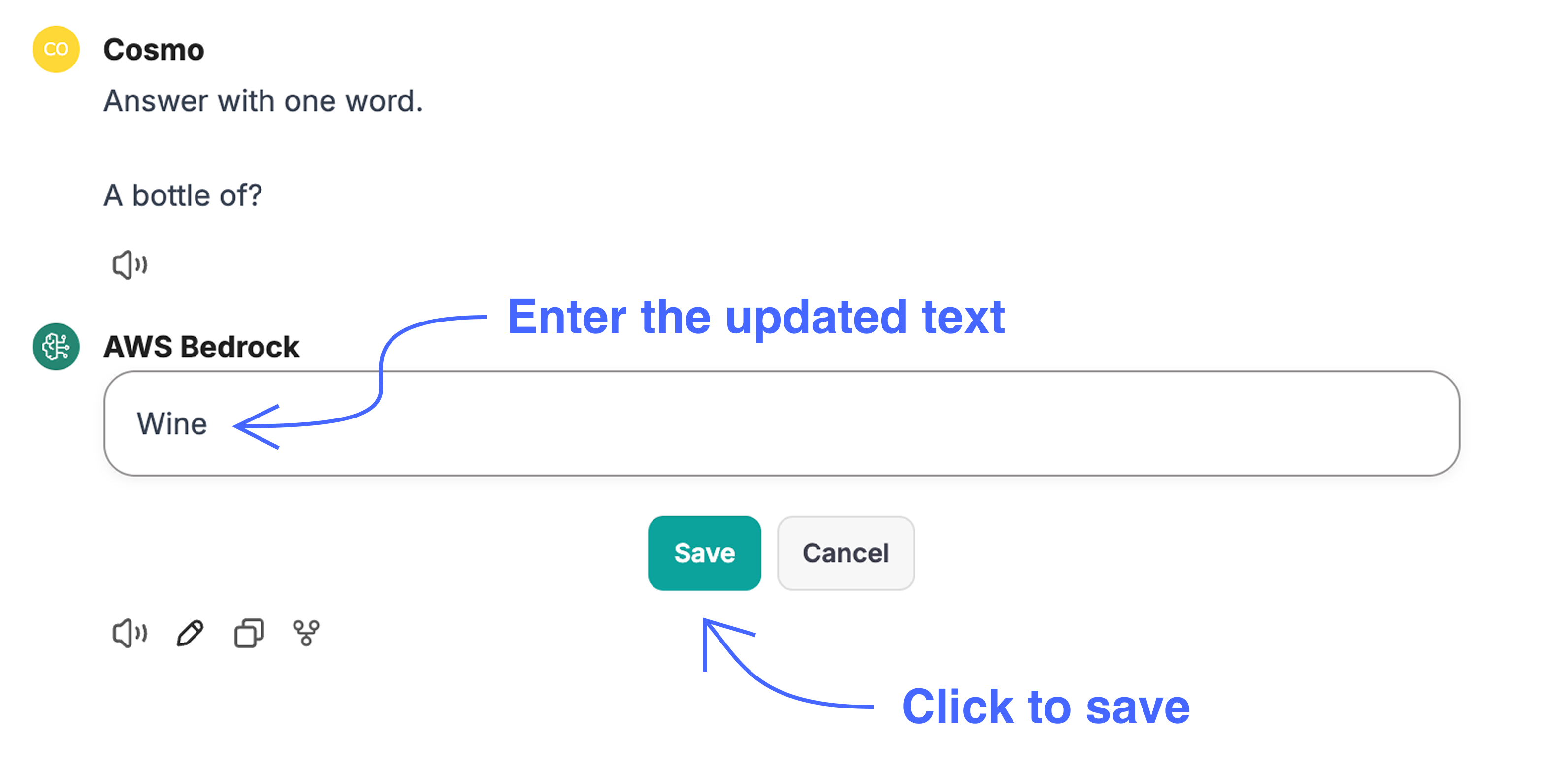

Once you have modified the answer, save it:

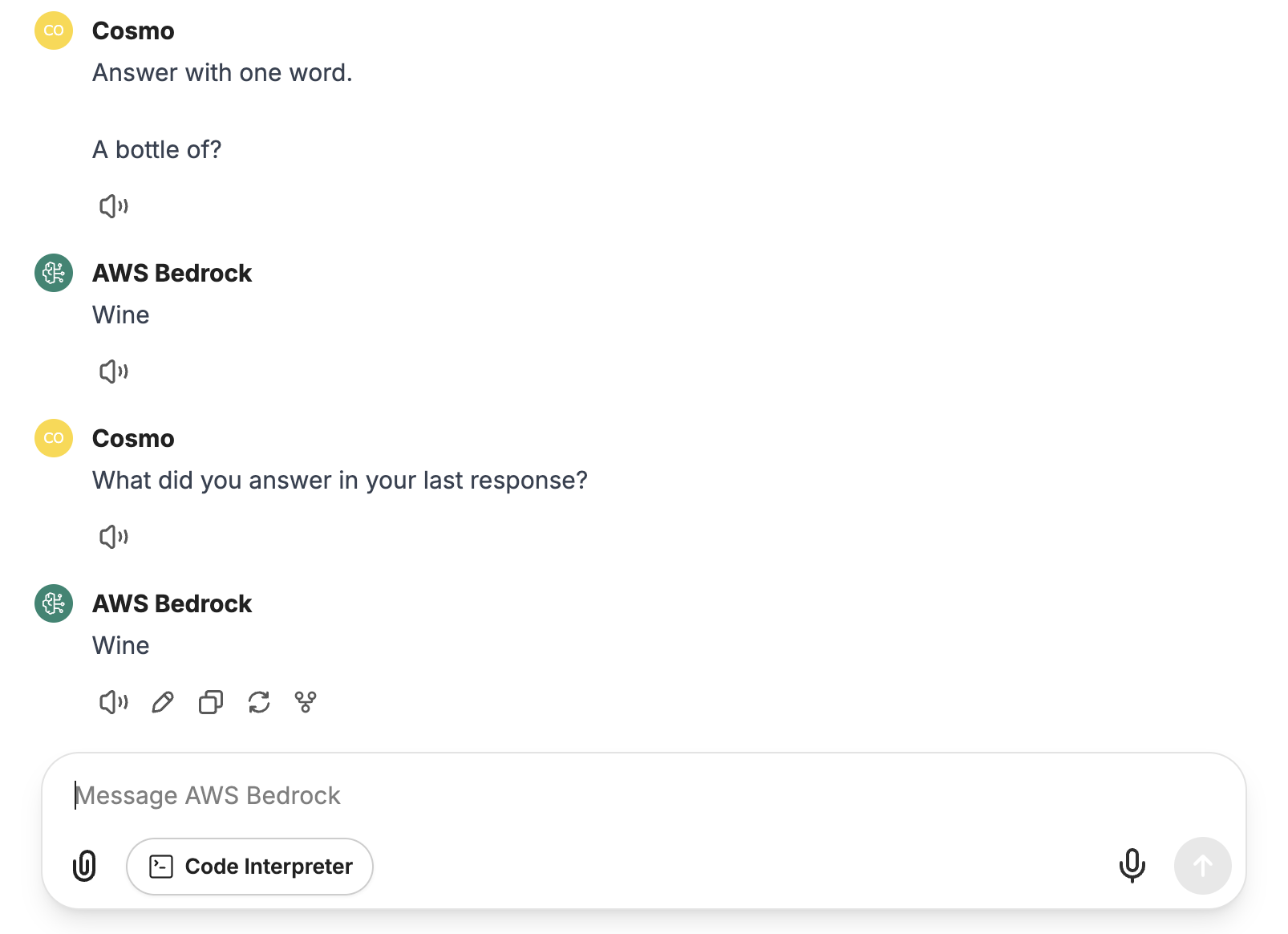

Let's test whether the LLM treats the edited version as its own answer:

Great!

When the initial response from an LLM doesn't fully meet your expectations, you can manually adjust the response to fit the desired format better. This process improves the clarity of the response and helps the LLM understand the task more effectively. When you ask the next question, the LLM will treat the modified answer as its initial answer and will likely answer using the same format.

For instance, let's reorganize the LLM's answer in the following way:

By removing initial context, implementation details, and other strategy types, we implicitly communicate the desired structure to the LLM.
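The same kind of trimming can be sketched in code. The example below is only an illustration under stated assumptions: the helper `simplify_answer` is hypothetical, the sample text is a placeholder rather than the lesson's actual output, and it assumes each strategy appears as a `- Name: details` bullet. In practice you would usually make this edit by hand in the chat interface.

```python
def simplify_answer(answer: str) -> str:
    """Keep only bullet lines, and only the name before the first colon."""
    kept = []
    for line in answer.splitlines():
        stripped = line.strip()
        if not stripped.startswith("- "):
            continue  # drop introductory and closing sentences
        name = stripped[2:].split(":", 1)[0].strip()  # drop implementation details
        kept.append(f"- {name}")
    return "\n".join(kept)


# Placeholder answer, not the lesson's real output.
original = """Here are some ideas to consider.
- Strategy A: a long implementation note.
- Strategy B: another long implementation note.
Let me know if you want more."""

print(simplify_answer(original))
```

The trimmed text is what you would save as the edited answer, so the next turn sees a short list of names and nothing else.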

Now, let's ask a follow-up question in the same dialogue. For instance, we can run this prompt:

And here is the LLM's answer:

Note how the LLM answers in the desired format even though we did not specify any additional constraints. By continuing the dialogue and refining the LLM's output, you can guide the LLM to better meet your needs.

Note: this approach can't guarantee the correct format, but it is usually faster than explicitly writing down all the constraints. That makes it very efficient, especially for resource creation or planning. We will see more such examples in the practice session.

LLMs are probabilistic systems, which means their responses can vary each time you interact with them, even with the same prompt and context. Because of this, you might encounter unexpected results during practice, especially when using techniques like editing previous messages. The efficiency of such approaches comes at the cost of reliability.

If this happens, you can always rerun your prompt in a new chat or further refine your prompt to guide the model more clearly. Experimentation and iteration are a regular part of working with LLMs.
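The variability described above can be illustrated with a toy sampler. This is a simplified sketch, not how any particular model is implemented: the two reply formats and their weights are made-up values, and `sample_reply` is a hypothetical helper that draws one outcome from a weighted distribution.

```python
import random
from typing import List, Optional


def sample_reply(options: List[str], weights: List[float], seed: Optional[int] = None) -> str:
    """Draw one reply format from a weighted distribution."""
    rng = random.Random(seed)  # a fixed seed makes the draw repeatable
    return rng.choices(options, weights=weights, k=1)[0]


# Made-up numbers: the edited context makes the short list likely, not certain.
options = ["bulleted list", "long paragraph"]
weights = [0.8, 0.2]

replies = [sample_reply(options, weights, seed=s) for s in range(200)]
print("bulleted list:", replies.count("bulleted list"))
print("long paragraph:", replies.count("long paragraph"))
```

Even when one format is strongly favored, some draws still land on the other one, which is why rerunning the prompt or refining it further is a normal part of the workflow.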

In this lesson, you learned how editing previous messages can help you refine the context and improve the quality of LLM responses. By recognizing when an answer isn't quite right and making targeted edits, you can guide the model to give more relevant and well-formatted answers in future turns.

You're now ready to practice these skills. In the next section, you'll get hands-on experience editing messages and seeing how your changes affect the LLM's responses. This is a key technique for anyone who wants to get the most out of language models — so let's get started!